Attacking Machine Learning: ML Attack Models, Adversarial Attacks and Data Poisoning Attacks

By: Luke

Evasion attacks on Machine Learning (or “Adversarial Examples”)

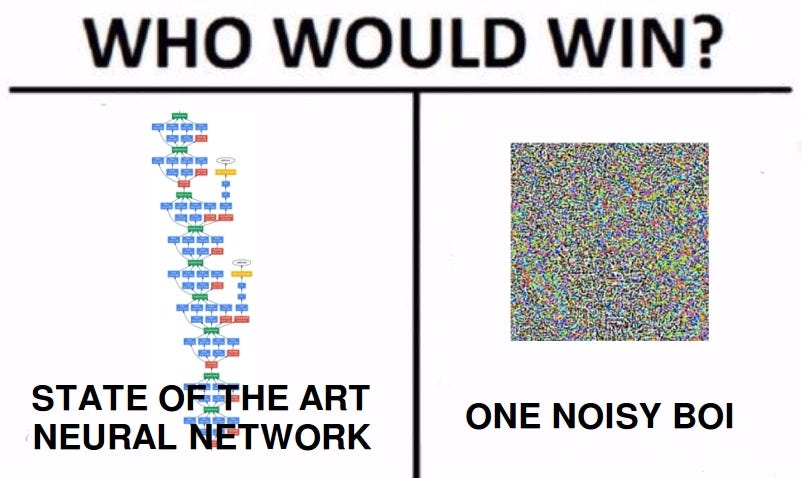

Adversarial inputs (“adversarial examples”) are human-imperceptible perturbations to the inputs of machine learning models (typically deep neural networks) that cause dramatic, unexpected changes to their outputs [9, 20, 57]. The most common way to infect a host nowadays … Recent years have seen a lot of attention paid to Deep Learning (DL) techniques used to detect cybersecurity attacks. The idea is adapted from the adversarial learning problem, which studies the vulnerability of machine learning systems to adversarial attacks: modifying data to foil … To perform a real-world and properly blinded evaluation, we attack a DNN hosted by MetaMind, an online deep learning API.
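The sketch below shows one well-known way to craft such a perturbation, the fast gradient sign method (FGSM). The text above does not say which method the cited works use, so treat this only as an illustration. It assumes a toy logistic-regression model rather than a deep network. For that model, the gradient of the cross-entropy loss with respect to the input has the closed form (p - y) * w. The weights `w`, bias `b`, input `x`, label `y` and budget `eps` are all hypothetical values.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(w, b, x):
    """Class-1 probability of a logistic-regression model."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def sign(v):
    return (v > 0) - (v < 0)


def fgsm(w, b, x, y, eps):
    """Fast gradient sign method for logistic regression.

    The gradient of the binary cross-entropy loss with respect to the
    input is (p - y) * w. Every feature is moved by eps in the direction
    of that gradient's sign, which increases the loss.
    """
    p = predict(w, b, x)
    grad = [(p - y) * wi for wi in w]
    return [xi + eps * sign(gi) for xi, gi in zip(x, grad)]


# Hypothetical toy model and a correctly classified input.
w, b = [2.0, -1.0, 0.5], 0.0
x, y = [0.3, 0.1, 0.2], 1
x_adv = fgsm(w, b, x, y, eps=0.25)
print(predict(w, b, x), predict(w, b, x_adv))  # first value above 0.5, second below
```

Each feature moves by at most `eps`, yet the predicted class flips. In a real image model the same per-pixel budget is spread over thousands of inputs, which is why the change can stay imperceptible.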

Phishing Attack Detection Using Machine Learning

Matthew Urwin | Aug 07, 2023. In this paper we address an e-health scenario in which an automatic system for prescriptions can be … Extraction attack. For example, an attacker may attempt to trigger a match on innocuous but politically charged content in an attempt to stifle speech.

…4%, respectively. For example, a random forest (RF) model builds a set of decision trees that classify a trace based on a … Adversarial machine learning is the study of attacks on machine learning algorithms and of the defenses against such attacks.

Attacks on machine learning models

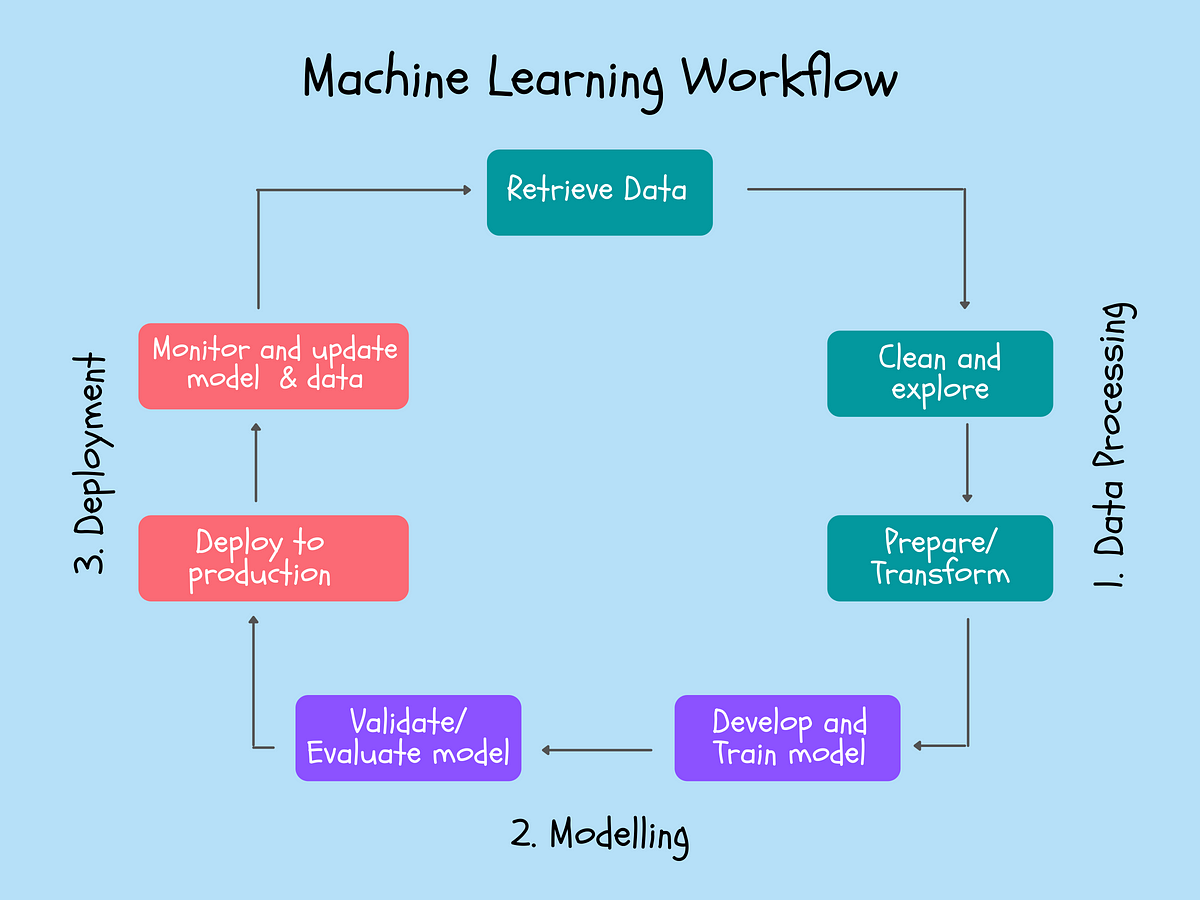

Adversarial attacks break the boundaries of traditional security defenses. Model extraction attacks aim to duplicate a machine learning model through query access to a target model. Attacking Perceptual Hashing with Adversarial Machine Learning – jprokos1/breaking-perceptual-hashing. Machine learning can mitigate cyber threats and bolster security infrastructure through pattern detection, real … Non-parametric (supervised) machine learning models make no assumptions about the density distribution functions.

Abstract: Machine learning is gaining popularity in the network security domain as many more network-enabled devices get connected, as malicious activities become stealthier, and as new technologies like …

An adversarial attack might entail presenting a machine learning model with inaccurate or misrepresentative data while it is training, or introducing maliciously … A survey from May 2020 exposes the fact that practitioners report a dire need for better protection of machine learning systems in industrial applications. Machine learning (ML) is becoming widely used for the detection and classification of malicious network activity to aid the response to cyber attacks, where a … Poisoning attack.
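To illustrate extraction through query access, the sketch below assumes the simplest possible case: a hypothetical black-box logistic-regression API (`make_oracle`) that returns a confidence score. Under that assumption the model can be recovered exactly by solving equations. The all-zeros query reveals the bias, and each unit-vector query reveals one weight, so n + 1 queries suffice for n features. APIs that return only labels, or that serve non-linear models, need many more queries and approximate methods.

```python
import math


def make_oracle(w, b):
    """Hypothetical black-box API that returns only the class-1 probability."""
    def query(x):
        z = sum(wi * xi for wi, xi in zip(w, x)) + b
        return 1.0 / (1.0 + math.exp(-z))
    return query


def logit(p):
    """Inverse of the sigmoid: recovers the linear score from a probability."""
    return math.log(p / (1.0 - p))


def extract_logistic(query, n_features):
    """Equation-solving extraction: n_features + 1 queries recover w and b."""
    b = logit(query([0.0] * n_features))   # score at the origin is b
    w = []
    for i in range(n_features):
        e = [0.0] * n_features
        e[i] = 1.0
        w.append(logit(query(e)) - b)      # score at unit vector e_i is w_i + b
    return w, b


secret = make_oracle([0.8, -1.5, 2.0], 0.3)  # the attacker never sees these values
w_hat, b_hat = extract_logistic(secret, 3)
print(w_hat, b_hat)
```

The recovered parameters match the hidden ones up to floating-point rounding. This is why returning full confidence scores makes extraction much cheaper than returning labels alone.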

Adversarial machine learning

1 Transfer Attack

Adversarial attacks against machine learning systems

Specifically, the increased Internet connectivity in CPS has resulted in a surge … This article comprehensively summarizes the latest research on adversarial attacks … These inputs are carefully crafted by attackers to deceive machine learning models and … Removing the images from the original distribution allows for an attack on … The attacks are performed with malicious programs such as malware, spyware, or ransomware. His research interests include multiview learning and adversarial machine learning, with publications on information fusion and information sciences. Most machine learning techniques are designed to work on specific … Adversarial machine learning, a technique that attempts to fool models with deceptive data, is a growing threat in the AI and machine learning research community. The SDL for ML helps developers build more secure software by reducing the number and severity of … Considering adversarial attacks and the characteristics of cloud services, we propose a Security Development Lifecycle for Machine Learning applications … However, machine learning systems are vulnerable to adversarial attacks, and this limits the application of machine learning, especially in non-stationary, adversarial environments such as the cyber security domain, where actual adversaries (e.g., malware developers) exist. Machine learning (ML) algorithms are the basis of many services we rely on in our everyday lives. In an extraction attack, the adversary obtains a copy of your AI system. Most attacks compute gradients directly, which is impossible for most PHMs because they are non-differentiable. LLMs are also susceptible to this, and I’ve linked some relevant papers in the further reading section.

The taxonomy is built on a survey of the AML literature and is arranged in a conceptual hierarchy that includes key types of ML methods, lifecycle stages of attack, attacker goals, and … We find that their DNN misclassifies 84.24% of the adversarial examples crafted with our substitute. According to the conventional belief about machine learning methods, deep learning models can correctly classify validation data that are independent and identically distributed (i.i.d.) with respect to the training dataset, but they have difficulty classifying samples outside that distribution. An evasion attack happens when the network is fed an “adversarial example”: a carefully perturbed input that looks and feels exactly the same as its untampered copy to a … In this article, Toptal Python Developer Pau Labarta Bajo examines the world of adversarial machine learning and explains how ML …
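Perceptual hashes are step functions of the input, so their gradients carry no useful signal. Attackers therefore often fall back on gradient-free search that only observes hash outputs. The sketch below is a toy version of that idea, not the PhotoDNA or PDQ attack from the cited work. It assumes a simple average hash: one bit per pixel of a small grayscale image, set when the pixel is above the mean. It then runs a greedy coordinate search that nudges each pixel by at most `delta` until the Hamming distance to the original hash reaches `target_bits`. All names and pixel values are hypothetical.

```python
def average_hash(pixels):
    """Toy perceptual hash: one bit per pixel, set when the pixel exceeds the mean."""
    mean = sum(pixels) / len(pixels)
    return [1 if p > mean else 0 for p in pixels]


def hamming(a, b):
    return sum(x != y for x, y in zip(a, b))


def evade_hash(pixels, delta, target_bits):
    """Greedy, gradient-free search that only calls average_hash.

    Each pixel is tried once with +delta and then -delta (clipped to
    0..255). A change is kept only if it increases the Hamming distance
    from the original hash. The search stops once target_bits is reached.
    """
    original = average_hash(pixels)
    adv, best = list(pixels), 0
    for i in range(len(adv)):
        if best >= target_bits:
            break
        for candidate in (adv[i] + delta, adv[i] - delta):
            trial = list(adv)
            trial[i] = min(255, max(0, candidate))
            dist = hamming(original, average_hash(trial))
            if dist > best:
                adv, best = trial, dist
                break
    return adv, best


# Hypothetical 4x4 grayscale image, flattened row by row.
image = [118, 122, 119, 121,
         90, 150, 90, 150,
         90, 150, 90, 150,
         90, 150, 90, 150]
adv, dist = evade_hash(image, delta=5, target_bits=3)
print(adv[:4], dist)  # [123, 117, 124, 121] 3
```

Pixels close to the mean are the cheapest to flip. Real perceptual hashes apply transforms such as DCTs before thresholding, which makes the search harder but does not change the basic black-box loop.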

Adversarial Machine Learning

The experimental results show that our method achieves the highest attack performance compared with other existing attack strategies. Despite all the hype around adversarial examples being a “new” phenomenon, they’re not actually that new. For this reason, a new line of research has recently emerged with the aim of investigating how ML can be misled by adversarial examples. Adversarial learning attacks against machine learning systems exist in an extensive number of variations and categories; however, they can be broadly classified: … In computer security, an adversary (or attacker) is someone (a person or a machine) who attacks a system in order to achieve some objective. The field of machine learning (ML) security, and the corresponding field of adversarial ML, is rapidly advancing as researchers develop sophisticated techniques to perturb, disrupt, or steal the ML model or …

Machine Learning in Hardware Security

Attacking Machine Learning Models as Part of a Cyber Kill Chain, Tam N. Nguyen, North Carolina State University. However, the adoption of ML also exposes CPS to the potential adversarial ML attacks documented in the literature. DL techniques can swiftly analyze massive datasets and automate the detection and mitigation of a wide variety of cybersecurity attacks with superior results.

“Sometimes you can extract the model by just observing what inputs you give the model and what …” In this paper, we demonstrate that the state-of-the-art ML-based visualization …

ML Attack Models: Adversarial Attacks and Data Poisoning Attacks

Using adversarial examples in … With our implementation, we were able to identify phishing and authentic websites with a success rate of 98… Attacking discrimination with smarter machine learning, by Martin Wattenberg, Fernanda Viégas, and Moritz Hardt. Model extraction attack.

A Tutorial on Adversarial Learning Attacks and Countermeasures

This page is a companion to a recent paper by Hardt, Price, … Abstract: Machine learning classifiers are known to be vulnerable to inputs maliciously constructed by adversaries to force misclassification. In this work we develop threat models for perceptual hashing algorithms in an adversarial setting, and present attacks against the two most widely deployed algorithms: PhotoDNA and PDQ. Attacking ML, the brain of driverless cars, can cause catastrophes. Even in unrestricted scenarios where there are sufficient traces to build precise leakage models for template attacks, machine learning can still perform better … Evading Machine Learning. This is an attack on the model itself, where the attacker tries to steal the machine learning model from the … Machine learning techniques can handle higher-dimensional features and extend the applicable range of side-channel analysis (SCA). As machine learning models matured and improved, so did the ways of attacking them. This paper proposes a novel approach to attacking CAV by fooling its ML model. Abstract: Cyber-physical systems (CPS) increasingly rely on machine learning (ML) techniques to reduce labor costs and improve efficiency. Here’s one example of a machine-generated joke: “Why did the chicken cross the road? To see the punchline.” An extensive, curated list of cybersecurity-related datasets is provided.

The machine learning field brings together several learning algorithms. Cybersecurity researchers refer to this risk as “adversarial machine learning,” as AI systems can be deceived (by attackers or “adversaries”) into making incorrect assessments. Machine learning (ML) has gained widespread adoption in a variety of fields, including computer vision and natural language processing. This NIST Trustworthy and Responsible AI report develops a taxonomy of concepts and defines terminology in the field of adversarial machine learning (AML). Zero-hour phishing attacks are easier to spot with machine learning solutions, and these systems also perform better against other, more novel forms of phishing.

Attacking Machine Learning Models as Part of a Cyber Kill Chain

A paper by one of the leading names in Adversarial ML, Battista Biggio, pointed out that the field of attacking machine learning dates back as far as 2004.

Learning Machine Learning Part 3: Attacking Black Box Models

This attack uses one machine learning model to attack another. 3 Non-parametric machine learning attack. Machine learning can be useful for the attack itself as well. Back then, adversarial examples were studied in the context … What is ML? Machine learning (ML) is a branch of artificial intelligence (AI) and computer science that focuses on using data and algorithms to enable AI to imitate the way … Once attackers have breached their target, they may pursue one or more of the following goals: espionage, fraud, and sabotage.
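Using one model to attack another can be sketched as a simplified substitute (transfer) attack, in the spirit of the MetaMind experiment mentioned earlier. The attacker only queries a black-box `oracle` for hard labels and trains a local substitute on those labels. It then crafts a perturbation from the substitute's gradient sign and hopes it transfers to the target. This toy assumes a 2-D linear oracle, a fixed query grid and a perceptron substitute. Published attacks use deep substitutes and smarter query selection, so none of these choices should be read as the cited authors' method.

```python
def oracle(x):
    """Hypothetical black-box target: returns only a hard label."""
    return 1 if x[0] + x[1] > 1.0 else 0


def train_substitute(points, labels, max_epochs=1000):
    """Perceptron substitute trained on labels obtained by querying the oracle."""
    w, b = [0.0, 0.0], 0.0
    for _ in range(max_epochs):
        mistakes = 0
        for x, y in zip(points, labels):
            pred = 1 if w[0] * x[0] + w[1] * x[1] + b > 0 else 0
            if pred != y:
                step = 1 if y == 1 else -1
                w = [w[0] + step * x[0], w[1] + step * x[1]]
                b += step
                mistakes += 1
        if mistakes == 0:  # substitute now agrees with every queried label
            break
    return w, b


def sign(v):
    return (v > 0) - (v < 0)


def transfer_attack(x, w_sub, eps):
    """FGSM-style step on the substitute that pushes a class-1 input toward class 0."""
    return [xi - eps * sign(wi) for xi, wi in zip(x, w_sub)]


grid = [(i / 2, j / 2) for i in range(3) for j in range(3)]
labels = [oracle(p) for p in grid]  # the attacker's only access to the target
w_sub, b_sub = train_substitute(grid, labels)

x = [0.7, 0.6]
x_adv = transfer_attack(x, w_sub, eps=0.25)
print(oracle(x), oracle(x_adv))  # 1 0
```

The attack transfers here because any substitute that fits the queried labels must have the same weight signs as the target. Real transferability is weaker and is usually measured as a misclassification rate over many inputs.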


How Machine Learning in Cybersecurity Works

According to Rubtsov, adversarial machine learning attacks fall into four major categories: poisoning, evasion, extraction, and inference. We demonstrate the general applicability of our strategy to many ML techniques by conducting the same attack … ATMPA: Attacking Machine Learning-based Malware Visualization Detection Methods via Adversarial Examples. Xinbo Liu, Jiliang Zhang*, Yaping Lin, He Li. College of Computer Science and Electronic Engineering, Hunan University, Changsha, China; Provincial Key Laboratory of Trusted System and Networks in Hunan University, China. Correspondence to: … Early studies mainly focus on discriminative models. Wei Liu is the Director of the Future Intelligence Research Lab, and an … However, no systematic study exists that summarizes these DL … Adversarial machine learning is systematically overviewed. In the first post in this series we covered a brief background on machine learning, the Revoke-Obfuscation approach for detecting obfuscated PowerShell scripts, and my efforts to improve the dataset and models for detecting obfuscated PowerShell. Deep-learning-based … Machine Learning in Cybersecurity.
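Of the four categories, poisoning is the one that acts at training time. The sketch below assumes a hypothetical one-dimensional nearest-centroid classifier trained on made-up values. The attacker injects a few points labeled as class 1 that actually sit near class 0. These drag the class-1 centroid toward a chosen target input until that input is misclassified. Real poisoning attacks target far more complex models, but the mechanism is the same: corrupting the training data rather than the test input.

```python
def fit_centroids(xs, ys):
    """Nearest-centroid classifier: store the mean training value of each class."""
    sums, counts = {}, {}
    for x, y in zip(xs, ys):
        sums[y] = sums.get(y, 0.0) + x
        counts[y] = counts.get(y, 0) + 1
    return {y: sums[y] / counts[y] for y in sums}


def classify(centroids, x):
    """Assign x to the class whose centroid is closest."""
    return min(centroids, key=lambda y: abs(x - centroids[y]))


# Hypothetical clean training data: class 0 near 2, class 1 near 8.
xs = [1.0, 2.0, 3.0, 7.0, 8.0, 9.0]
ys = [0, 0, 0, 1, 1, 1]
target = 4.5

clean = fit_centroids(xs, ys)

# Poisoning: inject three points that sit inside class 0 but carry label 1.
poisoned = fit_centroids(xs + [3.0] * 3, ys + [1] * 3)

print(classify(clean, target), classify(poisoned, target))  # 0 1
```

The injected points move the class-1 centroid from 8.0 to 5.5. Inputs far from the decision boundary, such as 1.0, are still classified correctly, which is part of what makes targeted poisoning hard to notice.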

Machine learning uses all of these data sets to improve the services provided and helps inform and guide companies’ decision-making. Market reports are also bringing attention to this problem: Gartner’s Top 10 Strategic Technology Trends for 2020, published in October 2019, predicts that “Through 2022, 30% of all AI cyberattacks …”

However, ML models are vulnerable to membership inference attacks (MIAs), which infer whether a given data record was used to train a target model, thus compromising the privacy of the training data. We ended up with three models: an L2 (Ridge)-regularized logistic regression, a LightGBM … Such adversarial … Since the threat of malicious software (malware) has become increasingly serious, automatic malware detection techniques have received increasing attention, and machine learning (ML)-based visualization detection methods have become more and more popular.
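A common baseline for membership inference exploits the fact that overfitted models tend to be more confident on records they were trained on. The sketch below assumes the attacker can read the model's confidence in the true label and guesses “member” above a fixed threshold. The confidence values and the 0.9 threshold are invented for illustration. In practice the threshold is usually calibrated, for example on shadow models.

```python
def guess_member(confidence, threshold):
    """Guess 'member' when the model's confidence in the true label exceeds threshold."""
    return confidence > threshold


def attack_accuracy(member_conf, nonmember_conf, threshold):
    """Fraction of records whose membership the threshold rule guesses correctly."""
    correct = sum(guess_member(c, threshold) for c in member_conf)
    correct += sum(not guess_member(c, threshold) for c in nonmember_conf)
    return correct / (len(member_conf) + len(nonmember_conf))


# Made-up confidences: an overfitted model is typically more confident on training records.
members = [0.99, 0.97, 0.95, 0.80]
non_members = [0.60, 0.85, 0.55, 0.70]
print(attack_accuracy(members, non_members, threshold=0.9))  # 0.875
```

On this balanced toy set, random guessing would score 0.5. Any accuracy well above that indicates the model leaks information about its training data.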

Attacking Perceptual Hashing with Adversarial Machine Learning
