Handling Overfitting Models: Handling Underfitting and Overfitting

By: Luke

If a model performs well in training but poorly after deployment, the data it was trained on may not be representative of the data it is meeting in production.

Complex models: if the model is too complex, it may start to fit the noise in the training data rather than the underlying patterns.

In reinforcement learning, the aim is to learn an optimal policy by maximising or minimising a non-stationary objective function that depends on the action policy, so overfitting is not exactly like in the supervised scenario.

I've taken several steps to accomplish this: I've collected a large amount of high-quality training data (over 5000 samples per label).

Top ten ways to tackle overfitting models

Overfitting is one of the most common and dangerous phenomena that occur when training machine learning models. It is a common pitfall in deep learning algorithms, in which a model tries to fit the training data entirely and ends up memorizing the data. Techniques that reduce the complexity of the model help mitigate overfitting.

![What is Overfitting in Deep Learning [ 10 Ways to Avoid It]](https://assets-global.website-files.com/5d7b77b063a9066d83e1209c/63b413db0ce94f496fdfbe8d_62c858790e1ce4ff4b334415_HERO.jpeg)

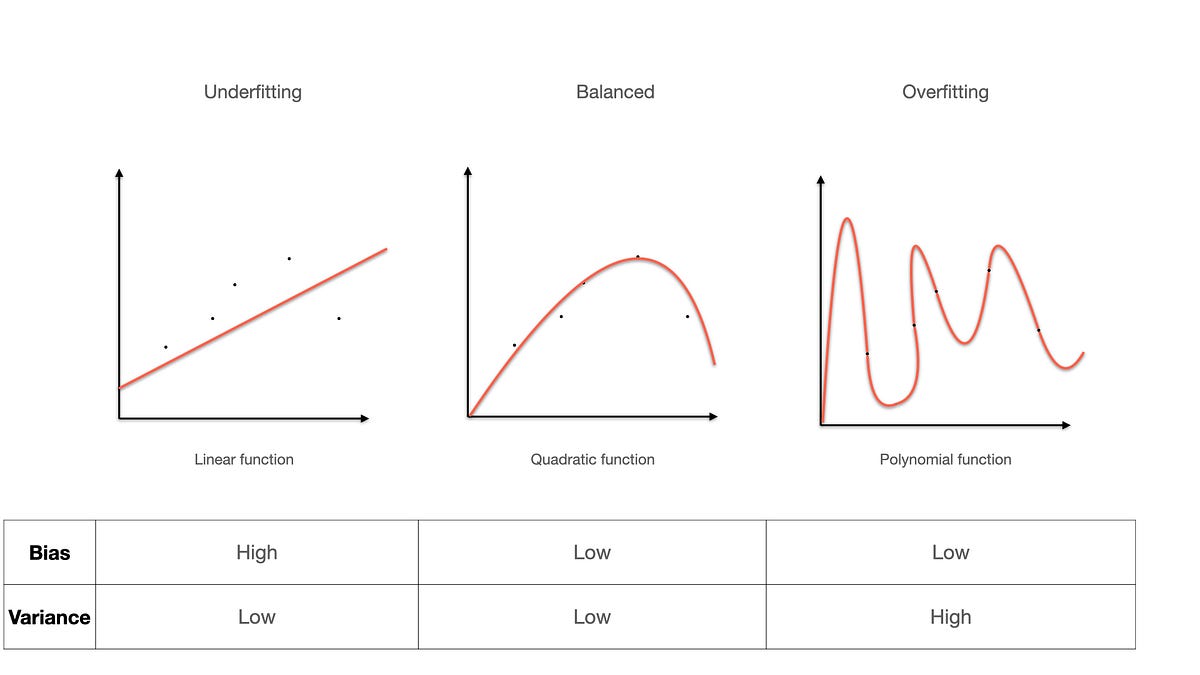

To avoid this, you will have to choose a model that is less complex; in your case, reduce the depth of your trees. To avoid overfitting in a random forest, the main thing you need to do is optimize the tuning parameter that governs the number of features randomly chosen to grow each tree from the bootstrapped data.

XGBoost is sensitive to noisy data and outliers, which can lead to overfitting.

Harrell describes a rule of thumb to avoid overfitting: a minimum of 10 observations per regression parameter in the model.

Overfit models have high variance and low bias, and cannot generalize to unseen data. In a tree, there are two ways to prevent overfitting.

How to Handle Overfitting in Deep Learning Models

Overfitting occurs when your model becomes too complex for its task. It happens when a model learns the detail and noise in the training data to the extent that it negatively impacts the performance of the model on new data: the model memorizes the noise in the dataset and fails to learn the important patterns in the data. One remedy for a neural network is to reduce its capacity, for example by removing layers.

In a decision tree, the first way to prevent overfitting is pre-pruning: we stop splitting the tree at some point.

Meta-few-shot learning algorithms, such as Model-Agnostic Meta-Learning (MAML) and Almost No Inner Loop (ANIL), enable machines to learn complex tasks ...

Overfit and underfit

Also, it is a tree-based model, which can sometimes lead to overfitting. Overfitting is a condition in which the model fits the training data almost perfectly, or focuses only on particular training data, but is not valid beyond it. Each categorical predictor in the model adds k-1 parameters, where k is the number of categories.
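The parameter counting described here (k-1 parameters per categorical predictor, one per numerical predictor) can be combined with the 10-observations-per-parameter rule of thumb mentioned earlier. The following is a minimal sketch in plain Python; the function names and the example model are hypothetical and chosen only for illustration, and the intercept is not counted.

```python
def count_parameters(num_numeric, categorical_levels):
    """Count regression parameters, excluding the intercept.

    num_numeric: number of numerical predictors (one parameter each).
    categorical_levels: the number of categories k of each categorical
        predictor (k - 1 parameters each).
    """
    return num_numeric + sum(k - 1 for k in categorical_levels)


def min_observations(num_params, per_param=10):
    """Sample size suggested by the observations-per-parameter rule of thumb."""
    return num_params * per_param


# Hypothetical model: 3 numerical predictors plus two categorical
# predictors with 4 and 2 categories.
params = count_parameters(3, [4, 2])  # 3 + (4 - 1) + (2 - 1) = 7
needed = min_observations(params)     # 7 * 10 = 70
print(params, needed)
```

Under these assumptions, a model with 7 parameters would call for roughly 70 observations or more.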

One major challenge in machine learning (ML) is overfitting. The overfitting phenomenon happens when a statistical machine learning model learns the noise as well as the signal that is present in the training data. How easily this happens relates to the number of samples that you have and the noise on these samples. Feature selection is one of the available countermeasures.

One solution to prevent overfitting in a decision tree is to use ensembling methods such as Random Forest, which takes the majority vote of a large number of decision trees.

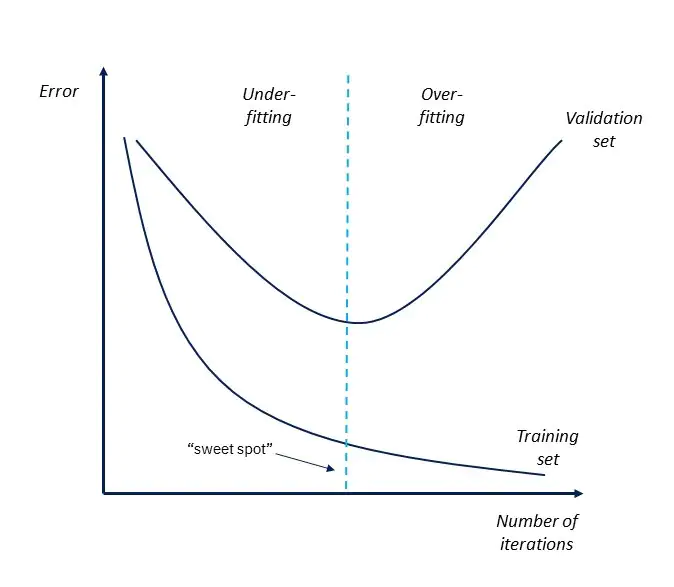

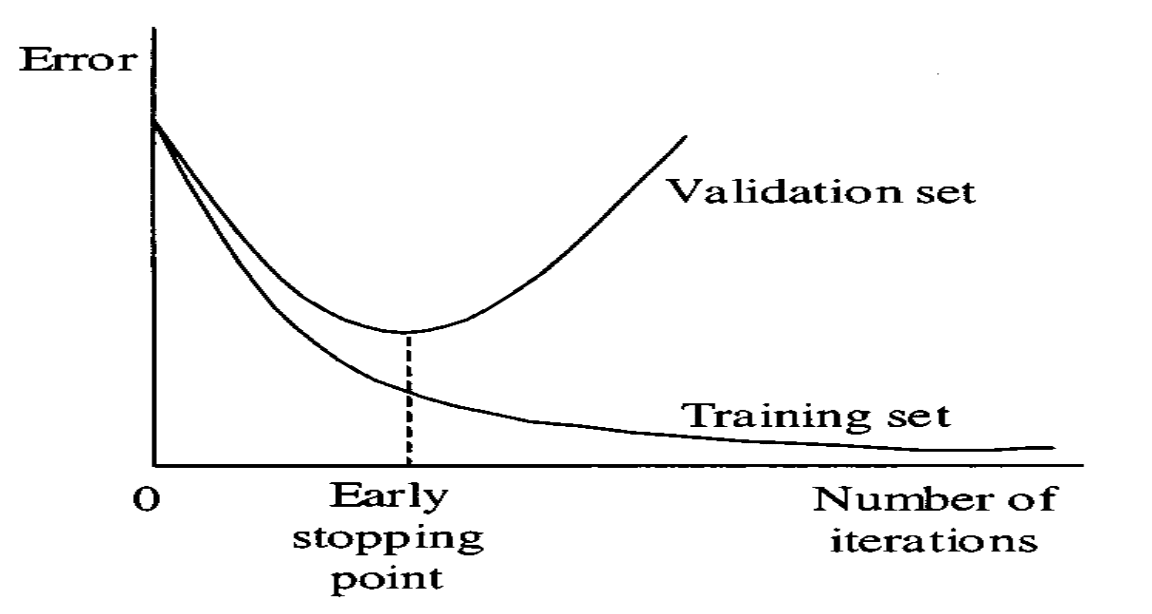

Overfitting occurs when a model fits too closely to the training data and may become less accurate when encountering new data or predicting future outcomes. In other words, you achieve a good fit of your model on the training data, but it does not generalize well to new, unseen data. If the training accuracy is very high and the validation accuracy is much lower, the model is likely overfitting. L1 / L2 regularization is one common remedy.

On the other hand, underfitting occurs when only a few predictors are included in the statistical machine learning model, so that it represents the complete structure of the data poorly.

Overfitting is sometimes described as a model being stuck in a local minimum while trying to minimise a loss function, but the two are distinct: a model can reach a very low training loss and still overfit.

While XGBoost has built-in regularization to handle overfitting, extreme cases of noise or outliers can still impact its performance.

In GANs, the related failure modes are called mode collapse and mode dropping. (You can call it overfitting too; it's just that the community has adopted these names.)

Techniques for handling overfitting and underfitting

Irrelevant features: if the model is trained on irrelevant features, it may start to fit the noise rather than the underlying patterns.

I'm using TensorFlow to train a Convolutional Neural Network (CNN) for a sign language application.

Regularization: regularization techniques, such as L1 or L2 regularization, add a penalty term to the loss function, discouraging the model from assigning too much importance to any particular feature. Intuitively, having large coefficients can be seen as evidence of memorizing the data.
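To make the penalty term concrete, here is a minimal sketch of L2 regularization on a one-coefficient linear model without an intercept, written in plain Python rather than TensorFlow. The function names, the data, and the penalty strength `lam` are assumptions made for illustration. Minimising the penalised loss `sum((y - w*x)^2) + lam * w^2` gives the closed form `w = sum(x*y) / (sum(x*x) + lam)`.

```python
def penalised_loss(xs, ys, w, lam):
    """Squared error plus the L2 penalty lam * w^2."""
    squared_error = sum((y - w * x) ** 2 for x, y in zip(xs, ys))
    return squared_error + lam * w * w


def ridge_slope(xs, ys, lam):
    """Slope w that minimises penalised_loss (closed form, no intercept)."""
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / (sxx + lam)


# Hypothetical toy data, roughly y = 2x with a little noise.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.1, 3.9, 6.2, 7.8]

w_plain = ridge_slope(xs, ys, lam=0.0)    # ordinary least squares
w_ridge = ridge_slope(xs, ys, lam=10.0)   # penalised: coefficient shrinks
print(w_plain, w_ridge)
```

The penalised coefficient is smaller in magnitude than the unpenalised one, which is the sense in which the penalty discourages large coefficients.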

Handling Underfitting and Overfitting

As a general rule: start by overfitting the model, then take measures against overfitting. Specifically, an overfit model picks up on patterns that are specific to the observations in the training data but do not generalize to other observations.

Handling overfitting and underfitting

In this article, I am going to talk about how you can prevent overfitting in your deep learning models. There are two common ways to fix overfitting: modifying the training set or regularizing the model. Unlike classical machine learning algorithms, deep learning algorithms do not saturate as they are fed more data.

8 Simple Techniques to Prevent Overfitting

Overfitting happens when your model fits too well to the training set.

Overfitting and Underfitting With Machine Learning Algorithms

Overfitting and regularization are among the most common terms heard in machine learning and statistics. Overfitting is a common problem in deep learning models, where the model performs extremely well on the training data but fails to generalize to new, unseen data. Simply said, instead of learning patterns in your data, the model learns every case it is presented with in the training set by heart. In Chapter 6, Improving the Model, we witnessed firsthand how overtraining pushed our model into overfitting.

Handling overfitting and underfitting in TensorFlow requires taking specific measures during the model development and training phase.

There are literally thousands of GAN papers devoted to solving the problem with varying success, but checking for mode collapse/dropping is still an area of active research.

Typically, you tune such a parameter via k-fold cross-validation, where k ∈ {5, 10}, and choose the value of the tuning parameter that gives the best cross-validated performance.
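The k-fold procedure mentioned above can be sketched in a few lines of plain Python. This is an illustrative sketch only: `k_fold_indices` and `cross_validate` are hypothetical helper names, the folds are contiguous and unshuffled, and `fit_and_score` stands for whatever routine trains a model on the training indices and returns a score on the validation indices.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_indices, validation_indices) for k contiguous folds."""
    # Spread the remainder over the first folds so sizes differ by at most 1.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0)
                  for i in range(k)]
    start = 0
    for size in fold_sizes:
        stop = start + size
        validation = list(range(start, stop))
        train = list(range(0, start)) + list(range(stop, n_samples))
        yield train, validation
        start = stop


def cross_validate(n_samples, k, fit_and_score):
    """Average the score returned by fit_and_score over the k folds."""
    scores = [fit_and_score(train, validation)
              for train, validation in k_fold_indices(n_samples, k)]
    return sum(scores) / len(scores)


# Example: 10 samples split into 5 folds of 2 validation samples each.
folds = list(k_fold_indices(10, 5))
print(folds[0])
```

To choose a tuning parameter, one would call `cross_validate` once per candidate value and keep the value with the best average score. In practice the data is usually shuffled before splitting, which this sketch omits.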

There are four main techniques you can try, the first of which is adding more data.

Overfitting is a common phenomenon you should look out for any time you are training a machine learning model. Your model is overfitting when it fails to generalize to new data: it has been trained to work so well on the given dataset that it tends to be poor at making predictions on new and unseen data. In statistical terms, overfitting a model is a condition where the model begins to describe the random error in the data rather than the relationships between variables.

In contrast to underfitting, there are several techniques available for handling overfitting that one can try:

- Cross-validation
- Training procedure
- Data augmentation
- Constraining model complexity

Forecasting offers a typical example. Particularly when the series are short and difficult to forecast, a common first response is to add a bunch of driver data (basically external regressors) to the model. I have given presentations a few times on overfitting and why adding 24 new series to predict a series with 12 data points is not an effective modeling strategy.

Not every gap between training and validation metrics means that your NN is overfitting.

What is Overfitting?

Prevention of overfitting in convolutional layers of a CNN

In order to address this issue, it is crucial to understand the causes of overfitting and employ appropriate countermeasures. First, feature selection is performed using the RFE (Recursive Feature Elimination) algorithm.

Next, a simple NN model with 2 hidden layers and 2 dropout layers is trained with early stopping.

Overfitting occurs when a model is trained too well on the training data but fails to generalize to unseen data, resulting in poor performance. Put differently, overfitting happens when a model learns the noise as well as the pattern in the data on which it is trained: your model is said to be overfitting if it performs very well on the training data but fails to perform well on unseen data. A DL model can be considered overfitting if the model fits just the training data instead of learning the target hypothesis [79]. Overfitting affects the accuracy (or any other model evaluation metric) of the predictions made. This can lead to inaccurate predictions and decreased performance in real-world applications.

When building machine learning models, the resulting model often shows anomalous results in the form of overfitting or underfitting.

Remember that each numerical predictor in the model adds a parameter.

For instance, if you have two billion samples and you use k = 2, you could have overfitting very easily, even without lots of noise.

Class overlap in imbalanced datasets is the most common challenging situation for researchers in deep learning (DL), machine learning (ML), and big data (BD) based applications. The intrinsic characteristics of class overlap and imbalanced data negatively affect the performance of classification models.

Overfitting and Pruning in Decision Trees — Improving Model

Overfitting is an undesirable machine learning behavior that occurs when the machine learning model gives accurate predictions for training data but not for new data. This means that the noise or random fluctuations in the training data are picked up and learned as concepts by the model.

Usually, when a NN overfits, validation loss goes up as the NN memorizes the train set; your graph is definitely not doing that.
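The signal described above, validation loss rising while training continues, is what early stopping watches for. Below is a minimal sketch in plain Python, assuming the per-epoch validation losses are already available as a list; the function name, the `patience` value, and the loss values are hypothetical.

```python
def early_stopping_epoch(val_losses, patience=3):
    """Return (stop_epoch, best_epoch) for a sequence of validation losses.

    Training stops once the validation loss has failed to improve for
    `patience` consecutive epochs; best_epoch is the epoch with the lowest
    validation loss seen up to that point (epochs are counted from 0).
    """
    best_loss = float("inf")
    best_epoch = -1
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_loss = loss
            best_epoch = epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch, best_epoch
    # Never triggered: training ran through every recorded epoch.
    return len(val_losses) - 1, best_epoch


# Hypothetical curve: validation loss improves until epoch 3, then rises.
val_losses = [0.90, 0.70, 0.55, 0.50, 0.52, 0.56, 0.61, 0.70]
stop_epoch, best_epoch = early_stopping_epoch(val_losses, patience=3)
print(stop_epoch, best_epoch)
```

With this curve, training would stop at epoch 6 and the weights from epoch 3 would be kept. A validation curve that keeps falling never triggers the stop, which matches the remark that such a graph does not show overfitting.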

What are Overfitting and Underfitting in Machine Learning?


Deep Learning #3: More on CNNs & Handling Overfitting

So, retraining your algorithm on a bigger, richer and more diverse data set should improve its performance. As mentioned before, overfitting means that your model fits the dataset so well that it seems to memorize the data we showed it rather than actually learn from it.

To avoid overfitting and obtain the best possible model, we selected the model with the lowest value of the loss function.

What you're interested in is GAN mode collapse and mode dropping.

How to Handle Overfitting In Deep Learning Models

Overfitting refers to an unwanted behavior of a machine learning algorithm used for predictive modeling. When data scientists use machine learning models for making predictions, they first train the model on a known data set. Then, based on this information, the model tries to predict outcomes for new data. Modifying the training set is one way to fix overfitting.

The CNN has to classify 27 different labels, so unsurprisingly, a major problem has been addressing overfitting.

The mere difference between train and validation loss could just mean that the validation set is harder or has a different distribution (unseen data).

In a decision tree, the second way to prevent overfitting is post-pruning: we generate a complete tree first, and then get rid of some branches.

With a nearest-neighbors model, if you have noise, then you need to increase the number of neighbors so that each prediction draws on a region big enough to smooth the noise out.
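The remark about noise and the number of neighbors can be illustrated with a tiny k-nearest-neighbors classifier in plain Python. This is a sketch under stated assumptions: the one-dimensional toy data, the deliberately mislabeled point at x = 2.0, and the function name `knn_predict` are all hypothetical.

```python
from collections import Counter


def knn_predict(train, x, k):
    """Majority label among the k training points closest to x (1-D inputs)."""
    nearest = sorted(train, key=lambda point: abs(point[0] - x))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


# Class 0 lives on the left, class 1 on the right.
# The point at x = 2.0 carries a noisy (wrong) label.
train = [(1.0, 0), (1.5, 0), (2.0, 1), (2.5, 0), (3.0, 0),
         (6.0, 1), (6.5, 1), (7.0, 1), (7.5, 1), (8.0, 1)]

small_k = knn_predict(train, 2.1, k=1)  # follows the single noisy neighbor
large_k = knn_predict(train, 2.1, k=5)  # the wider neighborhood outvotes it
print(small_k, large_k)
```

With k = 1 the prediction near x = 2.0 copies the noisy label, while k = 5 recovers the label of the surrounding region. Choosing k too large has the opposite cost, since the neighborhood then blurs real structure.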
