Linear Component Analysis

By: Luke

We deal with a model where a set of observations is obtained by a linear superposition of unknown components called sources. In linear discriminant analysis, LD1, the first new axis created, accounts for the largest share of the between-class variation. Through analysis of the GP-LVM objective function, we relate the model to popular spectral techniques such as …
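Here is a minimal NumPy sketch of this linear mixing model. It assumes the observations are stacked as \(X = AS\), where each row of \(S\) is one source and \(A\) is the mixing matrix. The helper `mix_sources`, the two synthetic sources and the particular matrix `A` are illustrative assumptions, not taken from the source. Separation methods must estimate \(S\) (up to scaling and permutation) from \(X\) alone. The last step only shows what "recovering the sources" means in the special case where \(A\) is known.

```python
import numpy as np

def mix_sources(S, A):
    """Hypothetical helper: observations X = A @ S.

    S has shape (n_sources, n_samples); A has shape (n_observations, n_sources).
    """
    S = np.asarray(S, dtype=float)
    A = np.asarray(A, dtype=float)
    if A.shape[1] != S.shape[0]:
        raise ValueError("A needs one column per source")
    return A @ S

# Two synthetic sources sampled at 1000 time points (illustrative only).
t = np.linspace(0.0, 8.0, 1000)
S = np.vstack([np.sin(2.0 * t), np.sign(np.sin(3.0 * t))])

# Arbitrary mixing matrix; in blind source separation it is unknown.
A = np.array([[1.0, 0.5],
              [0.3, 2.0]])
X = mix_sources(S, A)
print(X.shape)  # (2, 1000)

# With A known, the sources follow from solving A @ S = X.
# BSS methods have to do without A.
S_recovered = np.linalg.solve(A, X)
print(np.allclose(S_recovered, S))  # True
```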

It also emphasizes consistent notation. Principal component analysis can be used as a dimension-reduction technique in which we form new variables that are linear combinations of the original variables. Using this sparsity concept, we proposed a general framework for developing BSS/BMI methods applicable to different types of sources (one-dimensional signals, images, …) considered in various domains.

The Mathematics Behind Principal Component Analysis

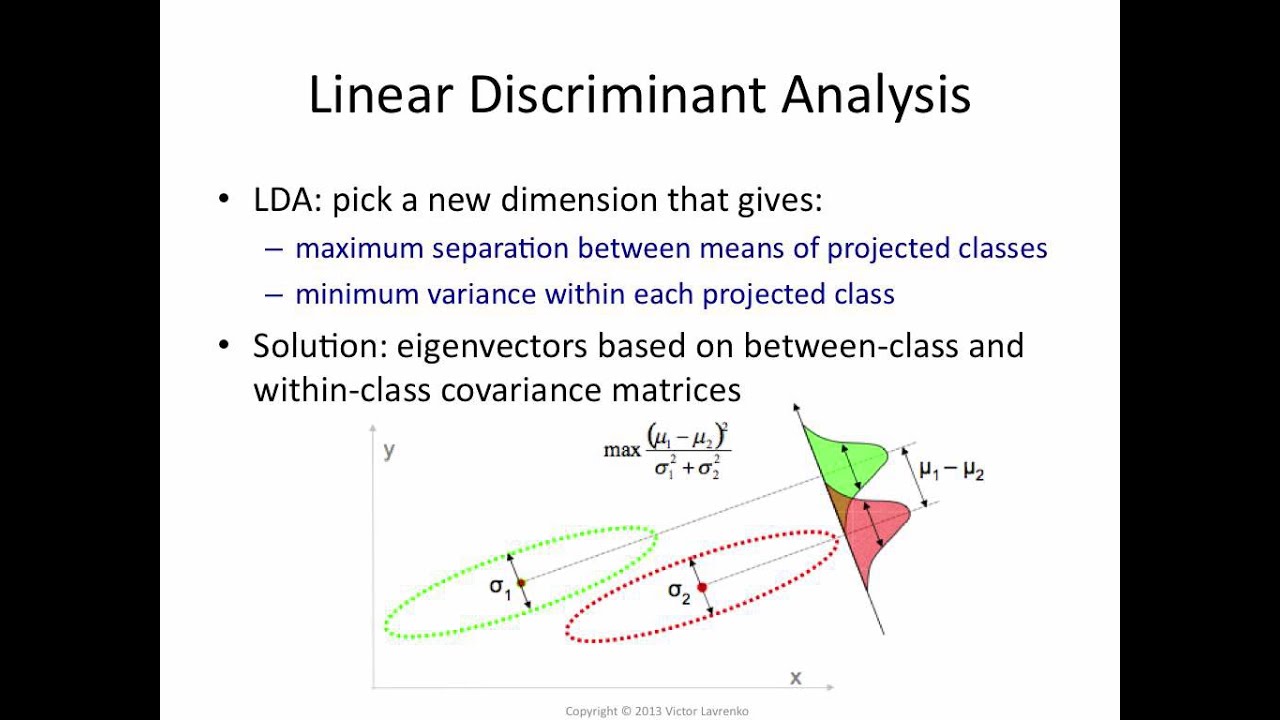

Click Continue, then click Scores in the Factor Analysis dialog box.

From: Handbook of Computational Intelligence in … In PCA, new features are created that arise as combinations of the original features. Both linear discriminant analysis (LDA) and principal component analysis (PCA) are linear transformation techniques that are commonly used for dimensionality reduction. PCA can be described as an "unsupervised" algorithm, since it "ignores" class labels, and its goal is to …

This chapter and the associated appendix present a third class of blind source separation and blind mixture identification methods intended for linear-quadratic mixtures (including their bilinear and purely quadratic restricted versions), namely methods based on sparse component analysis.
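To make these mixture classes concrete, here is one common way to write a linear-quadratic mixture, sketched with NumPy under our own assumptions. Each observation is a linear combination of the sources plus weighted products \(s_j s_k\) with \(j \le k\). Setting the squared terms (\(j = k\)) to zero gives the bilinear case, and setting the linear part to zero gives the purely quadratic case. The function name and array layout are hypothetical, and the chapter's own notation may differ.

```python
import numpy as np

def linear_quadratic_mix(S, A, B):
    """Hypothetical helper for a linear-quadratic mixture.

    x_i = sum_j A[i, j] * s_j + sum_{j <= k} B[i, j, k] * s_j * s_k

    S: sources, shape (n_sources, n_samples)
    A: linear part, shape (n_obs, n_sources)
    B: quadratic part, shape (n_obs, n_sources, n_sources); only entries
       with j <= k are used.
    """
    S = np.asarray(S, dtype=float)
    A = np.asarray(A, dtype=float)
    B = np.asarray(B, dtype=float)
    X = A @ S
    n_sources = S.shape[0]
    for j in range(n_sources):
        for k in range(j, n_sources):
            # Add the (j, k) product term to every observation, weighted by B[:, j, k].
            X = X + np.outer(B[:, j, k], S[j] * S[k])
    return X

S = np.array([[1.0, 2.0],
              [3.0, 4.0]])
A = np.eye(2)
B = np.zeros((2, 2, 2))
B[0, 0, 1] = 1.0  # bilinear term s_1 * s_2 in the first observation only
X = linear_quadratic_mix(S, A, B)
# First row: s_1 + s_1 * s_2 = [4, 10]; second row unchanged: [3, 4]
print(X)
```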

Principal Component Analysis Guide & Example

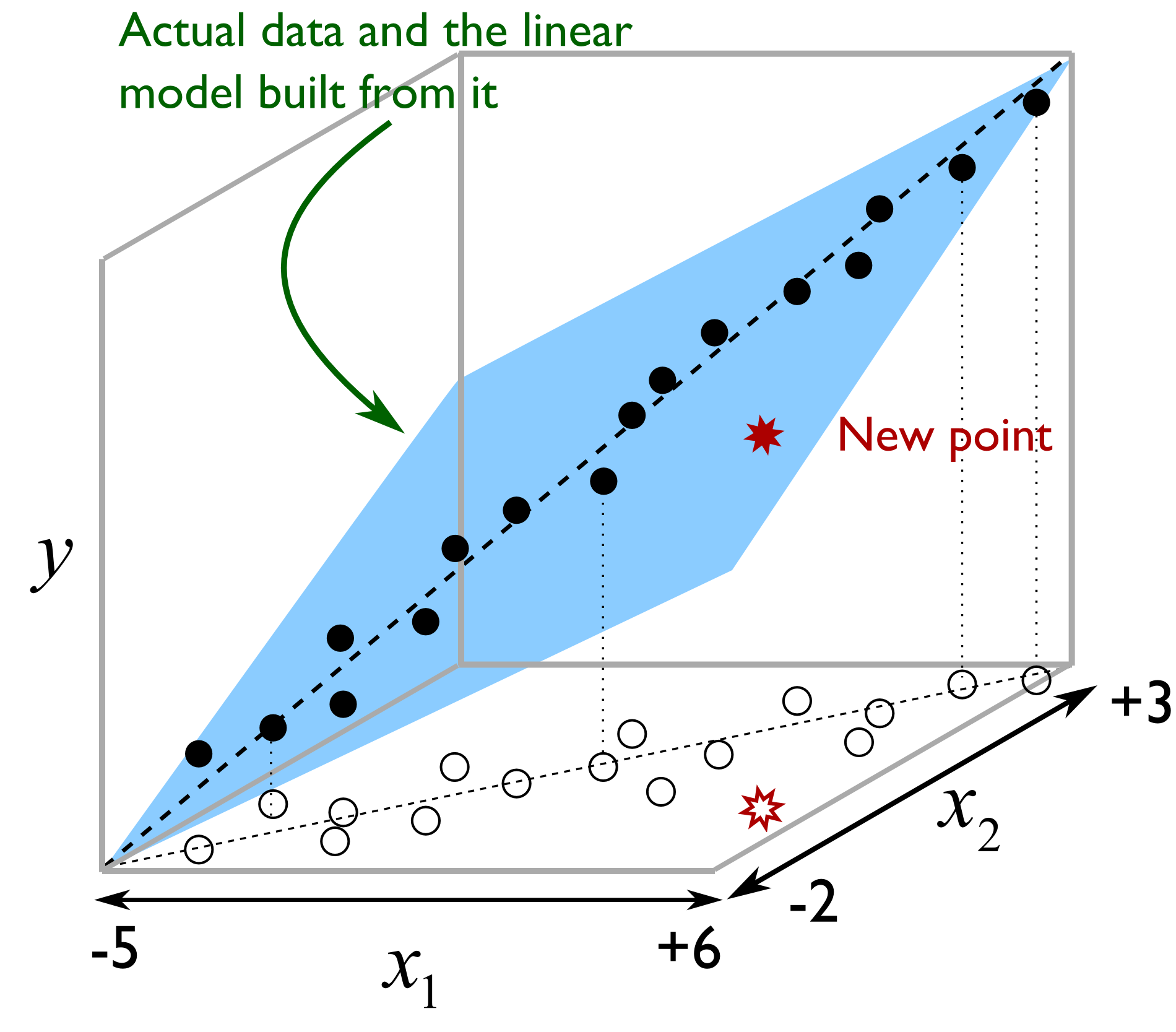

Principal component analysis seeks an orthonormal basis \(w_1, \dots, w_p\) such that \(w_1\) captures the most information (i.e., the most variance of the data), \(w_2\) captures the most information orthogonal to \(w_1\), and so on. Let \(X\) be the \(n \times p\) data matrix with rows \(x_1, \dots, x_n\).

Principal component analysis (PCA) is a technique for reducing the dimensionality of such datasets. It increases interpretability while minimizing information loss. This lecture provides the underlying linear algebra needed for practical applications. This article will introduce more linear algebra concepts, with the main focus on how these concepts are applied for dimensionality reduction, especially …

When carrying out principal component regression (PCR), the variable of interest (the response or dependent variable) is regressed against the principal components calculated using PCA, rather than against each individual explanatory variable from the original …

In the Factor Scores dialog box, select Save as variables.
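The definition of the weight vectors can be sketched briefly in NumPy, assuming \(X\) is stored with one observation per row. After centering the columns, the right singular vectors of \(X\) give the orthonormal vectors \(w_1, \dots, w_p\). The squared singular values divided by \(n - 1\) give the variance captured along each of them. The helper name `pca_svd` and the synthetic data are our own.

```python
import numpy as np

def pca_svd(X):
    """PCA of an n x p data matrix via the SVD of the centered data.

    Returns (W, variances): the columns of W are w_1, ..., w_p (right singular
    vectors), ordered by decreasing variance.
    """
    X = np.asarray(X, dtype=float)
    Xc = X - X.mean(axis=0)                      # center each variable
    _, s, Vt = np.linalg.svd(Xc, full_matrices=False)
    variances = s**2 / (X.shape[0] - 1)          # variance along each w_j
    return Vt.T, variances

rng = np.random.default_rng(42)
# Synthetic correlated data: 200 observations of 3 variables.
X = rng.normal(size=(200, 3)) @ np.array([[3.0, 0.0, 0.0],
                                          [1.0, 1.0, 0.0],
                                          [0.5, 0.2, 0.1]])
W, var = pca_svd(X)
print(np.round(W.T @ W, 6))  # identity matrix: the basis is orthonormal
print(var)                   # non-increasing
```

The same variances are the eigenvalues of the sample covariance matrix. The SVD route avoids forming that matrix explicitly.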

Within this framework, the first type of methods … It is expected that this new basis will reveal hidden structure in the data set and filter out the noise.

You survey 500 people whose incomes range from 15k to 75k and ask them to rank their happiness on a scale from 1 to 10.
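The survey example calls for simple linear regression rather than PCA. Below is a minimal least-squares sketch. The 500 incomes and happiness scores are synthetic stand-ins generated from an invented relationship, not survey data. Incomes are in thousands, and the scores are continuous rather than integer rankings.

```python
import numpy as np

def fit_simple_linear_regression(x, y):
    """Least-squares intercept b0 and slope b1 for y ≈ b0 + b1 * x."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    x_mean, y_mean = x.mean(), y.mean()
    b1 = np.sum((x - x_mean) * (y - y_mean)) / np.sum((x - x_mean) ** 2)
    b0 = y_mean - b1 * x_mean
    return b0, b1

# Synthetic stand-in for the survey: 500 incomes between 15k and 75k.
rng = np.random.default_rng(1)
income = rng.uniform(15, 75, size=500)                          # thousands
happiness = 1.0 + 0.07 * income + rng.normal(0, 0.7, size=500)  # invented relationship

b0, b1 = fit_simple_linear_regression(income, happiness)
print(f"happiness ≈ {b0:.2f} + {b1:.3f} × income (in thousands)")
```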

This means that the variance is as large as possible in the \(\mathbf u_1\) direction. Again, we assume that we are dealing with centered data, that is, each variable has mean zero.

Sparse Component Analysis (SCA) is one of the main approaches to Blind Source Separation (BSS) and Blind Mixture Identification (BMI). Principal component analysis is a dimensionality reduction method commonly used in data processing. The goal of PCA is to identify the most meaningful basis to re-express a given data set.

Linear discriminant analysis

Principal Component Analysis — Applied Linear Algebra

Make the notebook reproducible.
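In a NumPy-based notebook, one common way to do this is to create a single seeded random generator at the top and draw all simulated data from it. The seed value below is arbitrary. This is a general sketch, not the notebook's own code.

```python
import numpy as np

SEED = 12345  # arbitrary; any fixed integer works
rng = np.random.default_rng(SEED)
sample = rng.normal(size=5)

# A fresh generator with the same seed reproduces exactly the same draws.
replay = np.random.default_rng(SEED).normal(size=5)
print(np.array_equal(sample, replay))  # True
```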

Principal component analysis

Principal Component Analysis (PCA for short; in German, Hauptkomponentenanalyse) is used when you want to reduce the number of variables in … The weight vectors \(w_1, \dots, w_p\) are the (right) singular vectors of the centered data matrix \(X\). Due to computational constraints, principal component analysis is used for dimension reduction prior to ICA (PCA+ICA), which could remove important information.
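Here is a sketch of the PCA step that typically precedes ICA, assuming NumPy and data with one observation per row. It keeps the leading eigenvectors of the covariance matrix and rescales the projections to unit variance (whitening). The returned eigenvalue spectrum shows how much variance the discarded directions held, which is where the information loss mentioned above can occur. The function name and the synthetic Laplace sources are illustrative choices.

```python
import numpy as np

def pca_whiten(X, n_components):
    """Keep n_components principal directions of X and scale them to unit variance.

    X has one observation per row. Returns the whitened scores Z and the full
    eigenvalue spectrum of the covariance matrix (largest first).
    """
    X = np.asarray(X, dtype=float)
    Xc = X - X.mean(axis=0)
    eigvals, eigvecs = np.linalg.eigh(np.cov(Xc, rowvar=False))  # ascending order
    order = np.argsort(eigvals)[::-1][:n_components]              # largest first
    E, d = eigvecs[:, order], eigvals[order]
    Z = (Xc @ E) / np.sqrt(d)                                     # unit variance per column
    return Z, eigvals[::-1]

rng = np.random.default_rng(0)
S = rng.laplace(size=(1000, 2))              # two non-Gaussian synthetic sources
A = rng.normal(size=(5, 2))                  # mixed into five observed channels
X = S @ A.T + 0.01 * rng.normal(size=(1000, 5))

Z, spectrum = pca_whiten(X, n_components=2)
print(np.round(spectrum, 4))                 # the last three reflect only the added noise
print(np.round(np.cov(Z, rowvar=False), 6))  # identity: decorrelated, unit variance
```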

In the Method group, select Varimax.

Vertical components include pumping stations and storage facilities, whereas linear components include transmission mains and distribution system pipelines [1].
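The Varimax option can be sketched in a few lines of NumPy. This is the commonly used SVD-based iteration for an orthogonal rotation that maximizes the variance of the squared loadings within each factor. It is raw varimax, without the Kaiser normalization that SPSS applies by default, so rotated values may differ slightly from SPSS output. The loading matrix is made up for illustration.

```python
import numpy as np

def varimax(loadings, gamma=1.0, max_iter=100, tol=1e-8):
    """Orthogonal rotation of a p x k loading matrix; gamma=1.0 gives varimax.

    Returns the rotated loadings and the k x k orthogonal rotation matrix R.
    """
    L = np.asarray(loadings, dtype=float)
    p, k = L.shape
    R = np.eye(k)
    crit = 0.0
    for _ in range(max_iter):
        LR = L @ R
        col_ss = np.sum(LR**2, axis=0)               # column sums of squares
        target = LR**3 - (gamma / p) * LR * col_ss   # varimax target for this rotation
        u, s, vt = np.linalg.svd(L.T @ target)
        R = u @ vt                                   # nearest orthogonal matrix
        new_crit = np.sum(s)
        if crit > 0.0 and new_crit < crit * (1.0 + tol):
            break                                    # criterion stopped improving
        crit = new_crit
    return L @ R, R

loadings = np.array([[0.80, 0.35],
                     [0.75, 0.40],
                     [0.35, 0.80],
                     [0.30, 0.85],
                     [0.60, 0.55]])  # made-up unrotated loadings
rotated, R = varimax(loadings)
print(np.round(rotated, 3))
# An orthogonal rotation leaves each variable's communality unchanged.
print(np.allclose((loadings**2).sum(axis=1), (rotated**2).sum(axis=1)))  # True
```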

Principal Component Analysis

Unsupervised linear component analysis for a class of probability mixture models. Marc Castella, Senior Member, IEEE.

Principal component analysis, often abbreviated PCA, is an unsupervised machine learning technique that attempts to …

Linear Component

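Each weight vector \(w_j\) of the orthonormal basis sought by principal component analysis defines one linear component: a weighted combination of the original \(p\) variables.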

Principal Component Analysis (PCA)

Read this guide to understand the goals and uses of principal components analysis, understand the components themselves, and work through an example dataset.

This section explores a technique called principal component analysis, which enables us to reduce the dimension of a dataset so that it may be visualized or studied in a way … PCA retains the data in the direction of maximum variance. It works by computing the principal components and performing a change of basis. The data are linearly transformed onto a new coordinate system such that the directions … PC2 does the second-best job of capturing variation, and so on. You can picture these linear combinations as a line through the variable values, similar to a regression line.

Principal Component Analysis (PCA) is a statistical method that has gained substantial importance in fields such as machine learning, data analysis, and signal processing. The goal of this paper is to dispel the magic behind this black box. Instead of assuming independent source …

These non-linear mappings are designed to address the weaknesses in visualising data sets that arise when using standard statistical tools that rely on linear mappings, such as principal component analysis (PCA) and factor analysis (FA). With a linear mapping, it may not be possible to reflect the structure of the data through a low-dimensional …

Simple linear regression example.
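The change of basis can be sketched as follows, assuming NumPy and rows as observations. The centered data are projected onto the top \(k\) principal directions and mapped back. The mean squared reconstruction error shrinks as \(k\) grows and vanishes when \(k\) equals the number of variables. The scores on different components are uncorrelated. `pca_project` is a hypothetical helper.

```python
import numpy as np

def pca_project(X, k):
    """Project X (n x p) onto its first k principal directions and map back."""
    X = np.asarray(X, dtype=float)
    mean = X.mean(axis=0)
    Xc = X - mean
    _, _, Vt = np.linalg.svd(Xc, full_matrices=False)
    W_k = Vt[:k].T                  # p x k orthonormal basis of the top-k subspace
    scores = Xc @ W_k               # coordinates in the new basis
    X_hat = scores @ W_k.T + mean   # reconstruction in the original coordinates
    return scores, X_hat

rng = np.random.default_rng(7)
X = rng.normal(size=(300, 4)) @ rng.normal(size=(4, 4))  # synthetic, correlated

errors = []
for k in range(1, 5):
    _, X_hat = pca_project(X, k)
    errors.append(np.mean((X - X_hat) ** 2))
print(errors)  # non-increasing, essentially zero for k = 4

scores, _ = pca_project(X, 4)
print(np.round(np.cov(scores, rowvar=False), 6))  # diagonal: uncorrelated components
```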

Nonlinear Component Analysis as a Kernel Eigenvalue Problem

In the previous article, we talked about applying linear algebra to data representation in machine learning algorithms, but the application of linear algebra in ML is much broader than that. Principal component regression (PCR) is just one example of how principal components can be used in further analysis of data. Part of the book series: Springer Texts in Statistics (STS).

Traditional methods like principal component analysis (PCA) may overlook nonlinear structures, motivating the need for more sophisticated techniques like locally linear embedding (LLE). PCA is a dimension reduction technique, like linear discriminant analysis (LDA). Principal component analysis is a technique for feature extraction, so it combines our input variables in a specific way, …

Linear discriminant analysis (LDA), normal discriminant analysis (NDA), or discriminant function analysis is a generalization of Fisher's linear discriminant. Fisher's linear discriminant is a method used in statistics and other fields to find a linear combination of features that characterizes or separates two or more classes of objects or events. Component analysis is a method to find the right features from the data in an unsupervised way.

In source separation, the problem consists in recovering the sources without knowing the linear transform. The problem is that interesting independent … Different dimensions of a high-dimensional distribution may be strongly correlated, which is what makes dimensionality reduction possible in the first place.
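To contrast the two techniques, here is a two-class sketch of Fisher's linear discriminant in NumPy. It uses the classical direction \(w \propto S_W^{-1}(m_1 - m_0)\), with \(S_W\) the within-class scatter matrix, and compares it with the first principal direction of the pooled data. The synthetic classes are built so that the direction of largest overall variance is not the one that separates them. The helper names are ours. The sketch covers only the two-class Fisher case, not general multi-class LDA.

```python
import numpy as np

def fisher_direction(X0, X1):
    """Two-class Fisher discriminant direction w ∝ S_W^{-1} (m1 - m0), unit length."""
    m0, m1 = X0.mean(axis=0), X1.mean(axis=0)
    S_w = (X0 - m0).T @ (X0 - m0) + (X1 - m1).T @ (X1 - m1)  # within-class scatter
    w = np.linalg.solve(S_w, m1 - m0)
    return w / np.linalg.norm(w)

def fisher_criterion(w, X0, X1):
    """Squared distance between projected class means over projected within-class scatter."""
    m0, m1 = X0.mean(axis=0), X1.mean(axis=0)
    S_w = (X0 - m0).T @ (X0 - m0) + (X1 - m1).T @ (X1 - m1)
    return float((w @ (m1 - m0)) ** 2 / (w @ S_w @ w))

rng = np.random.default_rng(3)
cov = np.array([[1.0, 0.9], [0.9, 1.0]])                  # strongly correlated features
X0 = rng.multivariate_normal([0.0, 0.0], cov, size=200)
X1 = rng.multivariate_normal([1.0, -1.0], cov, size=200)  # means differ along (1, -1)

w_lda = fisher_direction(X0, X1)

# PCA ignores the labels: first principal direction of the pooled data.
pooled = np.vstack([X0, X1])
_, _, Vt = np.linalg.svd(pooled - pooled.mean(axis=0), full_matrices=False)
w_pca = Vt[0]

print("LDA:", fisher_criterion(w_lda, X0, X1))
print("PCA:", fisher_criterion(w_pca, X0, X1))  # much smaller on this data
```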

Principal Component Analysis in R: Step-by-Step Example

Independent component analysis (ICA) is popular in many applications, including cognitive neuroscience and signal processing. This notebook illustrates variance components analysis for two-level nested and crossed designs. By applying dimensionality reduction techniques such as principal component analysis (PCA) or linear discriminant analysis (LDA), the number of dimensions in a data set can be reduced.

Linear Principal Component Analysis

In fact, these subspaces are the best possible. The reduced features are uncorrelated with each other.

Principal component analysis (PCA) is a linear dimensionality reduction technique with applications in exploratory data analysis, visualization, and data preprocessing. It is a standard tool in modern data analysis and is used by almost all scientific disciplines. This is achieved by transforming to a new set of variables, the principal … This means that PCA works purely …

Principal Component Regression (PCR) is a regression technique that serves the same goal as standard linear regression: to model the relationship between a target variable and the predictor variables.

The DPPCA model has the additional advantage that the linear mappings from the embedded space can easily be non-linearised through Gaussian processes. However, it gives rise to a challenging …

Each column of the coefficient matrix coeff contains the coefficients for one principal component. The rows of X correspond to observations, and the columns correspond to variables.

… independent component analysis focuses on higher-order moments, which can, of course, be of very diverse and very complex nature.

The variance components notebook's setup cell:

```python
import numpy as np
import statsmodels.api as sm
from statsmodels.regression.mixed_linear_model import VCSpec
import pandas as pd
```
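Here is a compact sketch of principal component regression under our own assumptions: NumPy, rows as observations, and an intercept handled by centering. It regresses the centered response on the first \(k\) component scores, then maps the coefficients back to the original variables. With \(k\) equal to the number of predictors, PCR reproduces ordinary least squares, which makes a handy sanity check. `pcr_fit_predict` is a hypothetical helper. Choosing \(k\) (for example by cross-validation) is left out.

```python
import numpy as np

def pcr_fit_predict(X, y, k):
    """Principal component regression on the first k components.

    Returns fitted values for X and the implied coefficients on the original variables.
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    x_mean, y_mean = X.mean(axis=0), y.mean()
    Xc = X - x_mean
    _, _, Vt = np.linalg.svd(Xc, full_matrices=False)
    W_k = Vt[:k].T                                   # p x k loadings
    T = Xc @ W_k                                     # n x k component scores
    gamma, *_ = np.linalg.lstsq(T, y - y_mean, rcond=None)
    beta = W_k @ gamma                               # back to the original variables
    return Xc @ beta + y_mean, beta

rng = np.random.default_rng(11)
z = rng.normal(size=(100, 1))
# Three synthetic predictors, the first two strongly collinear.
X = np.hstack([z, z + 0.05 * rng.normal(size=(100, 1)), rng.normal(size=(100, 1))])
y = 2.0 * X[:, 0] - X[:, 2] + rng.normal(scale=0.5, size=100)

fitted_2, beta_2 = pcr_fit_predict(X, y, k=2)
fitted_3, _ = pcr_fit_predict(X, y, k=3)

# Ordinary least squares with an intercept, for comparison.
design = np.column_stack([np.ones(len(X)), X])
ols_coef, *_ = np.linalg.lstsq(design, y, rcond=None)
print(np.allclose(fitted_3, design @ ols_coef))  # True: all components = OLS
print(beta_2)
```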

Sparse Component Analysis Methods

A new method for performing a nonlinear form of principal component analysis is proposed. We know that the first principal component \(\mathbf u_1\) is the eigenvector of \(C\) associated with the largest eigenvalue.
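This claim can be checked numerically, along with the statement that projecting onto any other line retains less variance. The sketch below uses NumPy, synthetic data and our own names. It builds the sample covariance \(C\) of centered data and takes the eigenvector \(\mathbf u_1\) with the largest eigenvalue \(\lambda_1\). It then confirms that the variance of the projections onto \(\mathbf u_1\) equals \(\lambda_1\), while random unit directions do no better.

```python
import numpy as np

rng = np.random.default_rng(5)
X = rng.normal(size=(500, 3)) @ np.array([[2.0, 0.0, 0.0],
                                          [0.8, 1.0, 0.0],
                                          [0.0, 0.3, 0.5]])
Xc = X - X.mean(axis=0)                 # centered data
C = Xc.T @ Xc / (len(Xc) - 1)           # sample covariance matrix
eigvals, eigvecs = np.linalg.eigh(C)    # eigh returns eigenvalues in ascending order
u1, lam1 = eigvecs[:, -1], eigvals[-1]  # first principal direction and its eigenvalue

def projected_variance(u):
    """Sample variance of the data projected onto the unit vector along u."""
    u = u / np.linalg.norm(u)
    return np.var(Xc @ u, ddof=1)

print(projected_variance(u1), lam1)     # equal up to rounding
others = [projected_variance(rng.normal(size=3)) for _ in range(1000)]
print(max(others) <= lam1 + 1e-12)      # True: no random direction beats u1
```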

PC1, the first principal component formed by PCA, accounts for the maximum variation in the data. The central idea of principal component analysis (PCA) is to reduce the dimensionality of a data set consisting of a large number of interrelated variables, while retaining as much as possible of the variation present in the data set. Principal component analysis (PCA) is a dimensionality reduction and machine learning method used to simplify a large data set into a smaller set while still maintaining … It does this by transforming the data into fewer … The red line indicates the proportion of variance explained by each principal component. It is calculated by dividing that component's eigenvalue by the sum of all eigenvalues.

The data we need to process are often high-dimensional, and we can regard them as generated by some high-dimensional distribution.

Note that \(F\), which we will refer to as the feature space, could have an arbitrarily large, possibly infinite, dimensionality. For unsupervised learning tasks, …

Principal component analysis (PCA) is a mainstay of modern data analysis: a black box that is widely used but (sometimes) poorly understood. This manuscript focuses on building a solid intuition for how and why principal component analysis works.
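
The explained-variance calculation described for the red line can be sketched as follows, assuming NumPy and synthetic data. Each eigenvalue of the covariance matrix is divided by the sum of all eigenvalues. The cumulative sum is what one typically inspects when deciding how many components to keep. `explained_variance_ratio` is our own helper name.

```python
import numpy as np

def explained_variance_ratio(X):
    """Eigenvalue of each principal component divided by the sum of all eigenvalues."""
    X = np.asarray(X, dtype=float)
    eigvals = np.linalg.eigvalsh(np.cov(X, rowvar=False))[::-1]  # largest first
    return eigvals / eigvals.sum()

rng = np.random.default_rng(2)
X = rng.normal(size=(400, 5)) @ np.diag([5.0, 2.0, 1.0, 0.5, 0.1])  # synthetic
ratio = explained_variance_ratio(X)
print(np.round(ratio, 3))             # proportion per component
print(np.round(np.cumsum(ratio), 3))  # cumulative proportion
```
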

Principal Component Analysis (PCA) is a useful way to summarize high-dimensional data (repeated observations of multiple variables). Principal Component Analysis, or PCA, is a commonly used dimensionality reduction method. It simplifies the complexity in high-dimensional data while retaining trends and patterns. In contrast to LDA, PCA does not require class labels, so it is not limited to supervised learning tasks. The central ideas of PCA are orthonormal coordinate systems, the distinction … The resulting combination may be … These features can be used for unsupervised …

`coeff = pca(X)` returns the principal component coefficients, also known as loadings, for the n-by-p data matrix X.

The difference is that PCR uses the principal components as the predictor variables for regression analysis instead of the original …

We refer to this model as a Gaussian process latent variable model (GP-LVM). We extend the well-known Independent Component Analysis (ICA) methodology.

By the use of integral operator kernel functions, one can efficiently compute … Here and in the following, uppercase characters are used for elements of \(F\), and lowercase characters denote elements of \(\mathbb R^N\).

You are a social researcher interested in the relationship between income and happiness. Your independent variable (income) and dependent variable (happiness) are both quantitative, …
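
Here is a minimal kernel PCA sketch in NumPy, assuming a Gaussian (RBF) kernel with a hand-picked `gamma`. The kernel matrix is centered in feature space and its leading eigenvectors are computed. The training points' projections are then \(\sqrt{\lambda}\,\alpha\) for unit-norm eigenvectors \(\alpha\). With a linear kernel it reproduces ordinary PCA scores up to sign, which the usage lines check. The function names are ours, and projecting new points is omitted.

```python
import numpy as np

def rbf_kernel(X, Y, gamma=1.0):
    """Gaussian kernel exp(-gamma * ||x - y||^2) for all pairs of rows."""
    sq = np.sum(X**2, axis=1)[:, None] + np.sum(Y**2, axis=1)[None, :] - 2.0 * X @ Y.T
    return np.exp(-gamma * np.maximum(sq, 0.0))

def linear_kernel(X, Y):
    return X @ Y.T

def kernel_pca(X, n_components, kernel):
    """Projections of the training points onto the leading kernel principal components."""
    X = np.asarray(X, dtype=float)
    n = X.shape[0]
    K = kernel(X, X)
    J = np.full((n, n), 1.0 / n)
    Kc = K - J @ K - K @ J + J @ K @ J            # center the data in feature space
    eigvals, eigvecs = np.linalg.eigh(Kc)         # ascending order
    idx = np.argsort(eigvals)[::-1][:n_components]
    lam, alpha = eigvals[idx], eigvecs[:, idx]
    return alpha * np.sqrt(np.maximum(lam, 0.0))  # sqrt(lambda) * alpha

rng = np.random.default_rng(4)
X = rng.normal(size=(60, 3)) * np.array([3.0, 1.5, 0.5])

# Sanity check: with a linear kernel, kernel PCA matches ordinary PCA up to sign.
Xc = X - X.mean(axis=0)
_, _, Vt = np.linalg.svd(Xc, full_matrices=False)
pca_scores = Xc @ Vt[:2].T
kpca_scores = kernel_pca(X, 2, linear_kernel)
print(np.allclose(np.abs(kpca_scores), np.abs(pca_scores)))  # True

# Nonlinear version with an RBF kernel (gamma chosen by hand).
rbf_scores = kernel_pca(X, 2, lambda A, B: rbf_kernel(A, B, gamma=0.1))
print(rbf_scores.shape)  # (60, 2)
```
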

In (linear) independent component analysis (ICA), one assumes a very simple model of the data, namely that it is a linear mixture (German: Mischung) of some statistically independent sources (German: Quellen) \(s_i\), and …

LDA and PCA both form a new set of components.
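Here is a small numerical illustration of why ICA looks beyond second-order statistics, assuming NumPy and uniform synthetic sources. A linear mixture of independent non-Gaussian sources is closer to Gaussian than the sources themselves. Here that closeness is measured by excess kurtosis, which is 0 for a Gaussian. Many ICA algorithms exploit this by searching for demixing directions that make the outputs as non-Gaussian as possible. The sketch only shows the effect; it is not an ICA algorithm.

```python
import numpy as np

def excess_kurtosis(x):
    """Fourth standardized moment minus 3 (0 for a Gaussian)."""
    x = np.asarray(x, dtype=float)
    z = (x - x.mean()) / x.std()
    return float(np.mean(z**4) - 3.0)

rng = np.random.default_rng(0)
n = 200_000
s1 = rng.uniform(-1.0, 1.0, n)  # independent uniform sources (excess kurtosis -1.2)
s2 = rng.uniform(-1.0, 1.0, n)
mixture = 0.6 * s1 + 0.8 * s2   # one linear mixture of the two sources
gaussian = rng.normal(size=n)

print(round(excess_kurtosis(s1), 2))        # close to -1.2
print(round(excess_kurtosis(mixture), 2))   # closer to 0: about -0.65 in theory
print(round(excess_kurtosis(gaussian), 2))  # close to 0
```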

A popular robust alternative to classic principal component analysis (PCA) is L1-norm PCA (L1-PCA), which aims to find a subspace that captures the most variation in a dataset as measured by the L1-norm. Independent Component Analysis is a statistical method that decomposes a multivariate signal into its individual components, assuming that … In other words, projecting onto any other line retains a smaller amount of variance.

L1-PCA has shown great promise in alleviating the effect of outliers in data-analytic applications.
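For intuition, rank-1 L1-PCA can be solved exactly on tiny data by exhaustive search. The search uses the identity \(\max_{\|w\|=1} \sum_i |x_i^\top w| = \max_{b \in \{\pm 1\}^n} \|X^\top b\|_2\), with the optimum at \(w = X^\top b / \|X^\top b\|_2\). The NumPy sketch below is ours and costs \(2^n\) steps, so it is only for illustration; practical L1-PCA solvers are more elaborate. The toy data include one large outlier, and the data are not centered, so the two printed directions can be compared on equal footing.

```python
import itertools
import numpy as np

def l1_pca_rank1_exact(X):
    """Exact rank-1 L1-PCA by exhaustive search; only feasible for a few rows.

    Maximizes sum_i |x_i . w| over unit vectors w via the identity
    max_w ||X w||_1 = max over b in {-1, +1}^n of ||X^T b||_2,
    attained at w = X^T b / ||X^T b||_2.  Cost grows as 2^n.
    """
    X = np.asarray(X, dtype=float)
    n = X.shape[0]
    best_w, best_val = None, -np.inf
    # b and -b give the same value, so fix the first sign to +1.
    for tail in itertools.product((-1.0, 1.0), repeat=n - 1):
        b = np.array((1.0,) + tail)
        v = X.T @ b
        val = np.linalg.norm(v)
        if val > best_val and val > 0.0:
            best_val, best_w = val, v / val
    return best_w, float(np.sum(np.abs(X @ best_w)))

rng = np.random.default_rng(8)
X = rng.normal(size=(12, 2)) * np.array([3.0, 0.5])  # spread mostly along the x-axis
X = np.vstack([X, [[0.0, 25.0]]])                     # one gross outlier along y

w_l1, obj_l1 = l1_pca_rank1_exact(X)
_, _, Vt = np.linalg.svd(X, full_matrices=False)      # L2 direction, also uncentered
w_l2 = Vt[0]
print("L1-PCA direction:", np.round(w_l1, 3))
print("L2-PCA direction:", np.round(w_l2, 3))
```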
