What Is Gradient Descent In Machine Learning?

By: Luke

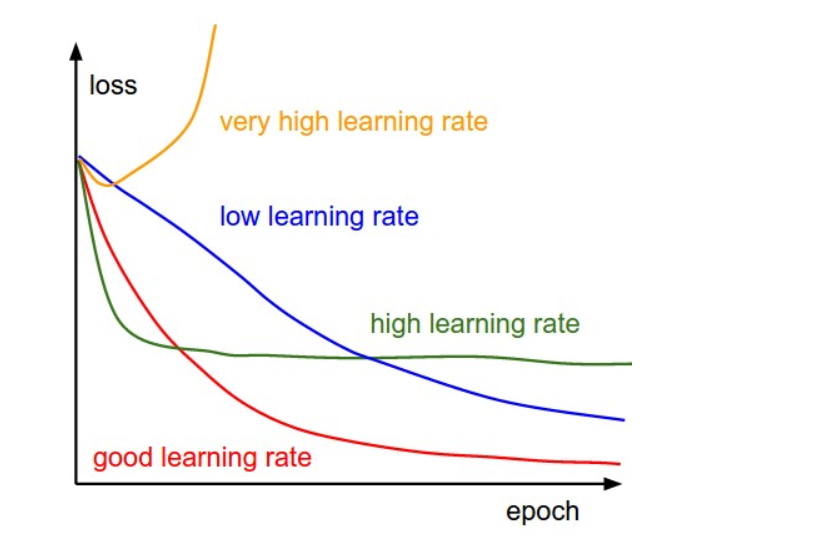

Gradient descent is an algorithm that solves optimization problems using first-order iterations. In machine learning in particular, and in mathematical optimization in general, we frequently need to find the minimum (or sometimes the maximum) value of some function. When updating the parameter values, the algorithm considers the function’s gradient, the user-defined learning rate, and the initial parameter values. The lowest point it searches for represents the best solution for a machine learning model. Gradient descent is used to update the parameters of machine learning models, for example the regression coefficients in linear regression and the weights in a neural network.

Just like SGD, mini-batch gradient descent produces an average cost over the epochs that fluctuates, because it averages over only a small number of examples at a time. The vanishing gradient problem arises during backpropagation, the process used to update the weights of a neural network through gradient descent. Understanding these issues requires a clear intuition about how gradient descent itself works.
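The basic update, driven by the gradient, a learning rate and an initial parameter value, can be sketched in a few lines of Python. This is a minimal illustration, not any particular library’s implementation: the objective f(x) = (x − 3)², the initial value 0.0, the learning rate 0.1 and the number of steps are arbitrary choices made for the example.

```python
def gradient_descent_1d(grad, x0, learning_rate, n_steps):
    """Plain gradient descent on a function of one variable.

    grad: function returning the derivative f'(x)
    x0: initial parameter value
    learning_rate: user-defined step size
    """
    x = x0
    for _ in range(n_steps):
        x = x - learning_rate * grad(x)  # step against the gradient
    return x

# Example objective f(x) = (x - 3)**2, whose minimum is at x = 3.
def grad_f(x):
    return 2 * (x - 3)

x_min = gradient_descent_1d(grad_f, x0=0.0, learning_rate=0.1, n_steps=100)
print(round(x_min, 4))  # → 3.0
```

Each step here multiplies the distance to the minimum by 1 − 2 × 0.1 = 0.8, so the iterate approaches x = 3 geometrically.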

Gradient Descent — Machine Learning Works

Gradient descent is one of the best-known techniques in machine learning and is used to train all sorts of neural networks. It plays an indispensable role in improving a model’s accuracy: it minimizes the cost function that measures how far the model’s predictions are from the targets.

Gradient Descent For Machine Learning

The mechanism has been modified in several ways over time to make it more robust. Stochastic gradient descent, for example, can be used to learn the coefficients of a simple linear regression model. In neural networks, the gradients are calculated using the chain rule. Gradient boosting, which applies the same idea of following the negative gradient to build an ensemble of models, is one of the most powerful techniques for building predictive models.
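A minimal sketch of stochastic gradient descent for simple linear regression, y ≈ b0 + b1·x, in Python. The toy data, learning rate, epoch count and function name are illustrative assumptions; each update uses a single example and the gradient of its squared error.

```python
import random

def sgd_simple_linear_regression(xs, ys, learning_rate=0.01, epochs=1000, seed=0):
    """Fit y ≈ b0 + b1 * x with stochastic gradient descent.

    Each update uses a single (x, y) example and the gradient of its
    squared error 0.5 * (prediction - y) ** 2 with respect to b0 and b1.
    """
    rng = random.Random(seed)
    b0, b1 = 0.0, 0.0  # initial coefficient values
    order = list(range(len(xs)))
    for _ in range(epochs):
        rng.shuffle(order)  # visit the examples in a new random order each epoch
        for i in order:
            error = (b0 + b1 * xs[i]) - ys[i]
            b0 -= learning_rate * error          # d/db0 of 0.5 * error**2 is error
            b1 -= learning_rate * error * xs[i]  # d/db1 of 0.5 * error**2 is error * x
    return b0, b1

xs = [1.0, 2.0, 4.0, 3.0, 5.0]
ys = [1.0, 3.0, 3.0, 2.0, 5.0]
b0, b1 = sgd_simple_linear_regression(xs, ys)
print(f"b0 = {b0:.3f}, b1 = {b1:.3f}")
```

For this toy data the ordinary least-squares solution is b0 = 0.4, b1 = 0.8; with a constant learning rate, SGD keeps hovering close to it rather than settling exactly.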

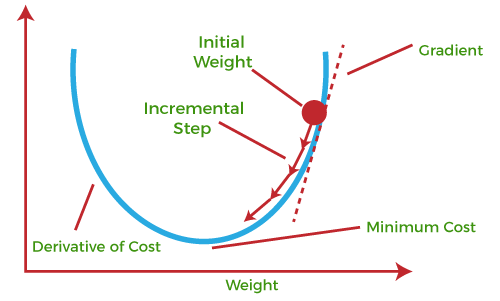

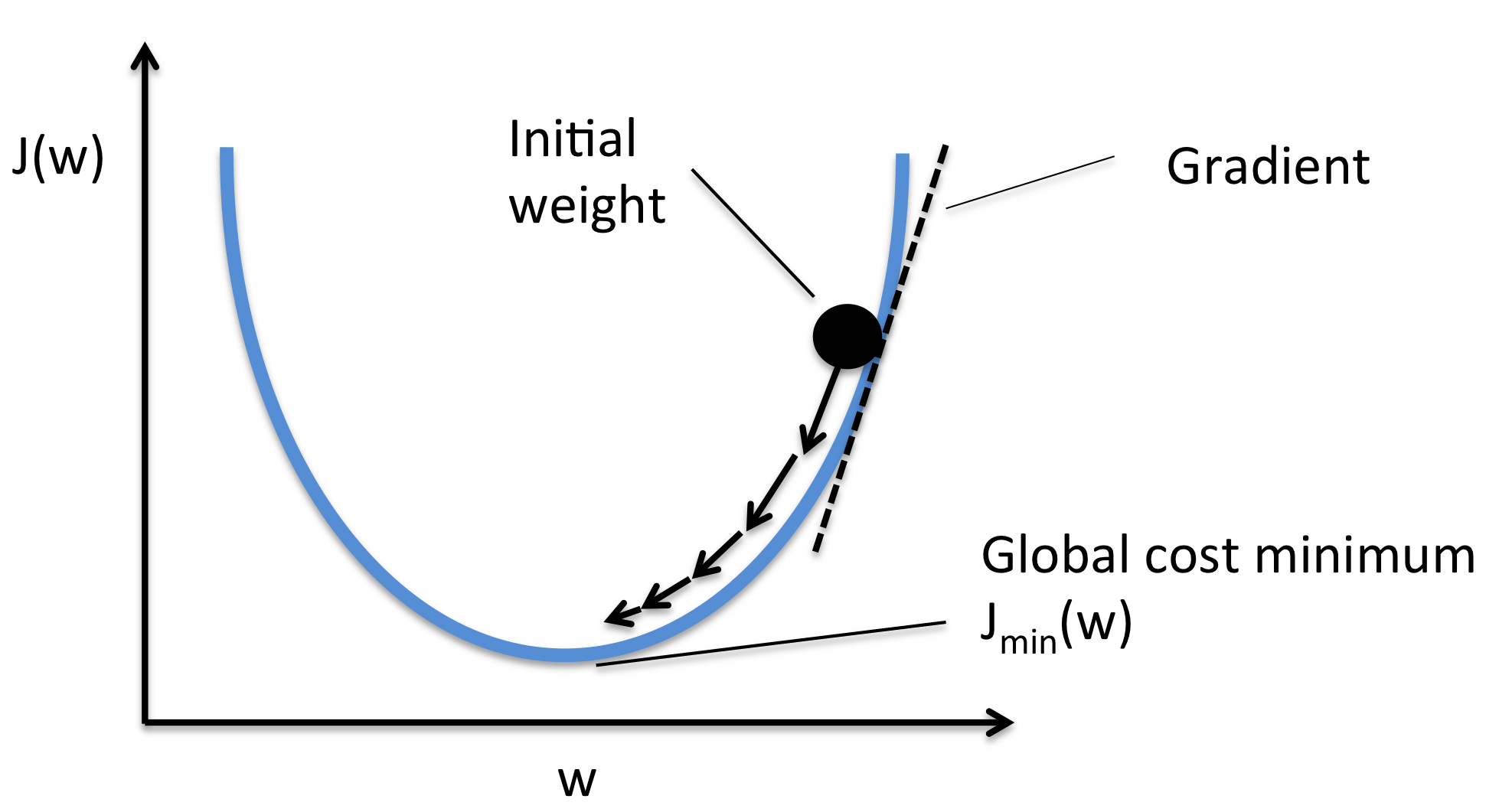

The first step is to take the gradient and calculate the change in each parameter according to the size of the step taken. Gradient descent takes into account a user-defined learning rate and the initial parameter values. At each iteration, the parameters are moved a small step in the direction that lowers the cost. Mini-batch sizes typically range from 50 to 256, although, as with other machine learning techniques, there is no set standard because the best size depends on the application. Gradient descent is one of the most commonly used iterative optimization algorithms for training machine learning and deep learning models.
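Splitting a training set into mini-batches might look like the following Python sketch. The batch size of 64 (inside the 50 to 256 range mentioned above), the shuffling strategy and the function name are assumptions for illustration.

```python
import random

def make_mini_batches(examples, batch_size, seed=None):
    """Shuffle the training examples and split them into mini-batches.

    The last batch is smaller when len(examples) is not a multiple of batch_size.
    """
    shuffled = list(examples)
    random.Random(seed).shuffle(shuffled)
    return [shuffled[i:i + batch_size] for i in range(0, len(shuffled), batch_size)]

batches = make_mini_batches(range(1000), batch_size=64, seed=42)
print(len(batches), len(batches[0]), len(batches[-1]))  # → 16 64 40
```

Shuffling before splitting means each epoch sees the examples grouped differently, which is one common way to build mini-batches.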

CHAPTER Gradient Descent

Gradient descent can be used to train not only neural networks but many other machine learning models as well.

Vanishing Gradient Problem Definition

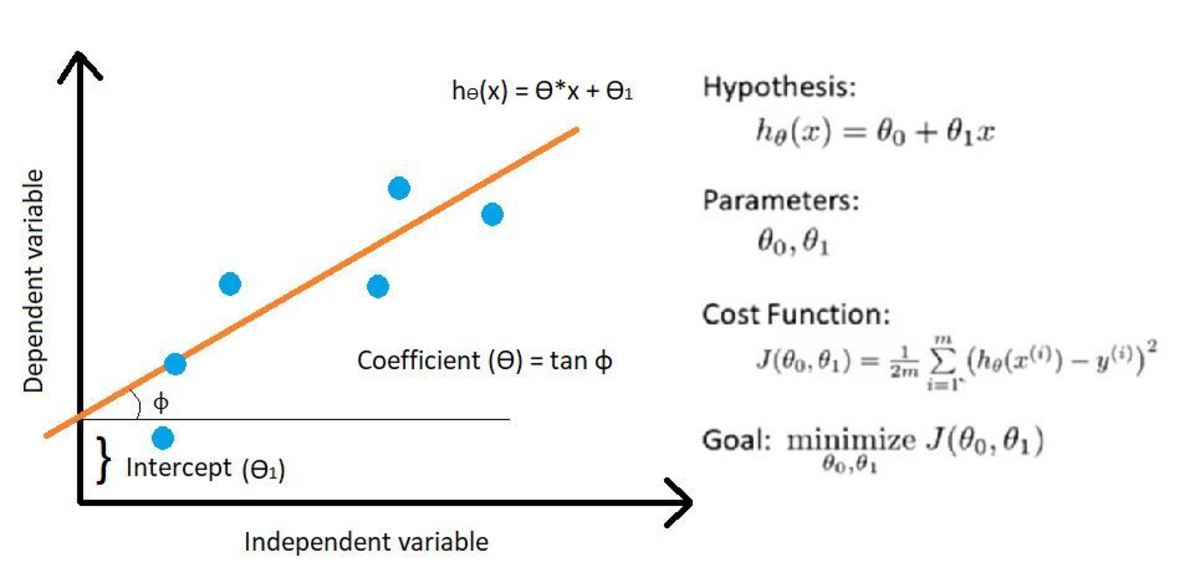

Gradient descent is an optimization algorithm that operates iteratively to find the optimal values of a model’s parameters. Here, parameters refer to the coefficients in linear regression and the weights in neural networks. Deep learning neural networks, for example, are fit using stochastic gradient descent. It is frequently the first optimization algorithm introduced for training machine learning models. Dissecting the term “gradient descent” clarifies how it relates to machine learning algorithms: the gradient points in the direction in which the cost increases fastest, and descent means stepping the opposite way.

Lesson 7: Gradient Descent (part 1/2)

Gradient is a commonly used term in optimization and machine learning, and gradient descent is one of the most important algorithms in all of machine learning and deep learning. It uses a cost function to optimize its parameters. After each update, the procedure goes back to the first step and repeats.

Beginner: Cost Function and Gradient Descent

Gradient descent is an iterative process that finds the minima of a function. This method is commonly used in machine learning (ML) and deep learning.

Gradient Descent: A Guide for Machine Learning

Gradient descent is one of the most powerful optimization algorithms used in machine learning. In its mini-batch variant, we calculate the mean gradient over each mini-batch. Getting to grips with the inner workings of gradient descent will therefore be of great benefit to anyone who plans to explore ML algorithms further.
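The mini-batch procedure (average the per-example gradients over a batch, then update the weights with that mean) can be sketched in Python for a one-feature linear model y ≈ w·x + b. The batch size, learning rate, epoch count and the noise-free data generated from y = 2x + 1 are assumptions for illustration.

```python
import random

def minibatch_gd_linear(xs, ys, batch_size=2, learning_rate=0.05, epochs=1000, seed=0):
    """Fit y ≈ w * x + b with mini-batch gradient descent on half the squared error."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0  # initial parameter values
    data = list(zip(xs, ys))
    for _ in range(epochs):
        rng.shuffle(data)
        for start in range(0, len(data), batch_size):
            batch = data[start:start + batch_size]
            # Mean gradient of 0.5 * (w*x + b - y)**2 over the mini-batch
            grad_w = sum((w * x + b - y) * x for x, y in batch) / len(batch)
            grad_b = sum((w * x + b - y) for x, y in batch) / len(batch)
            # Use the mean gradient to update the parameters
            w -= learning_rate * grad_w
            b -= learning_rate * grad_b
    return w, b

xs = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0]
ys = [2 * x + 1 for x in xs]  # noise-free data from y = 2x + 1
w, b = minibatch_gd_linear(xs, ys)
print(f"w = {w:.4f}, b = {b:.4f}")
```

Because the data contain no noise, every mini-batch gradient vanishes at w = 2, b = 1, so the iterates settle there.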

What Is Gradient Descent?

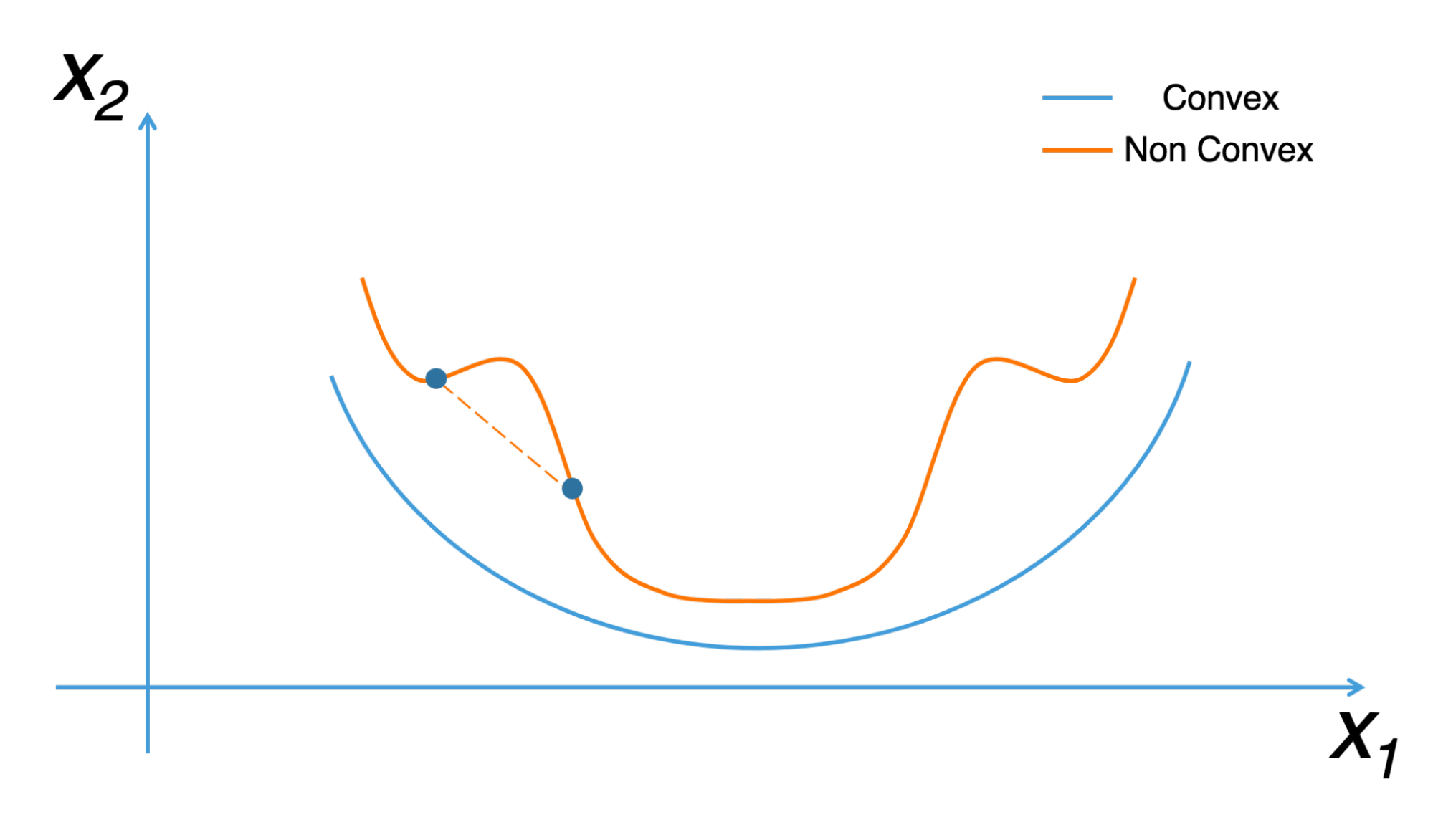

If the function we are trying to optimize is convex and smooth, then for any value of ϵ there is some step size η such that gradient descent converges to within ϵ of the true optimum θ*. Regularization, such as an L1 or L2 penalty added to the cost function, is often used alongside gradient descent. In practice, however, stochastic gradient descent is not used to calculate the coefficients of linear regression in most cases, because they can be computed directly with least squares.
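To show how an L2 penalty enters the update, here is a minimal Python sketch for a one-weight model y ≈ w·x with cost J(w) = (1/2n)·Σ(w·xᵢ − yᵢ)² + (λ/2)·w². The penalty adds λ·w to the gradient. The data, λ = 1 and the step settings are illustrative assumptions; because this cost is convex, the result can be checked against the closed-form minimizer w* = (Σxᵢyᵢ/n) / (Σxᵢ²/n + λ).

```python
def ridge_gradient_descent(xs, ys, lam, learning_rate=0.01, n_steps=2000):
    """Minimize (1/2n) * sum((w*x - y)**2) + (lam/2) * w**2 for a single weight w."""
    n = len(xs)
    w = 0.0
    for _ in range(n_steps):
        data_gradient = sum((w * x - y) * x for x, y in zip(xs, ys)) / n
        # The L2 penalty (lam/2) * w**2 contributes lam * w to the gradient
        w -= learning_rate * (data_gradient + lam * w)
    return w

xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.0, 6.0, 8.0]  # exactly y = 2x, so the unregularized answer is w = 2
lam = 1.0

w_gd = ridge_gradient_descent(xs, ys, lam)
mean_xx = sum(x * x for x in xs) / len(xs)
mean_xy = sum(x * y for x, y in zip(xs, ys)) / len(xs)
w_closed = mean_xy / (mean_xx + lam)
print(round(w_gd, 4), round(w_closed, 4))  # → 1.7647 1.7647
```

The penalty pulls the weight from 2 toward 0; larger values of λ shrink it further.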

Gradient Descent — A Beginners Guide

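In mini-batch gradient descent, computing the gradients, averaging them, and updating the weights is repeated for each of the mini-batches we created.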

Understanding Gradient Descent for Machine Learning

We then move in the direction that decreases the cost. Examples of functions that need to be minimized include the loss functions used in linear regression and K-means clustering.

As in the picture, imagine you are at the top of a mountain and your goal is to reach the lowest point. Understanding gradient descent is worthwhile: once you do, you will better comprehend how most ML algorithms work.

Gradient descent is an iterative approach for locating a function’s minimum; applied to a model, it iteratively searches for the optimal values of the model’s parameters. After each parameter update, the new gradient is calculated at the new parameter value. To improve a given set of weights, we get a sense of how the cost function behaves for weights similar to the current ones by calculating the gradient there. Trained this way, machine learning models can serve as powerful instruments for recognizing or predicting certain kinds of patterns.
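The idea of probing the cost at weights near the current ones can be made literal with a finite-difference estimate of the gradient, which is also a common way to check an analytic gradient. The Python sketch below uses central differences; the cost function, the step h = 1e-6 and the function names are assumptions chosen for illustration.

```python
def numerical_gradient(f, w, h=1e-6):
    """Estimate the gradient of f at w (a list of floats) with central differences."""
    grad = []
    for i in range(len(w)):
        w_plus = list(w)
        w_plus[i] += h
        w_minus = list(w)
        w_minus[i] -= h
        grad.append((f(w_plus) - f(w_minus)) / (2 * h))
    return grad

# Hypothetical cost with a known analytic gradient
def cost(w):
    return (w[0] - 1) ** 2 + 3 * (w[1] + 2) ** 2

def analytic_gradient(w):
    return [2 * (w[0] - 1), 6 * (w[1] + 2)]

w = [0.5, 0.0]
print(numerical_gradient(cost, w))
print(analytic_gradient(w))  # → [-1.0, 12.0]
```

The two results should agree up to small rounding error; a large mismatch usually points to a bug in the analytic gradient.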

Gradient Descent Tutorial

Gradient descent applies to optimization problems ranging from a simple high-school textbook exercise to a real-world machine learning cost function.

Gradient Descent in Machine Learning: What & How Does It Work

In particular, gradient descent can be used to train a linear regression model. The algorithm is effective because, given a suitable learning rate, it can obtain an approximate solution for a differentiable convex function, and it is one of the main driving algorithms behind machine learning and deep learning methods.

Gradient Descent Algorithm Explained

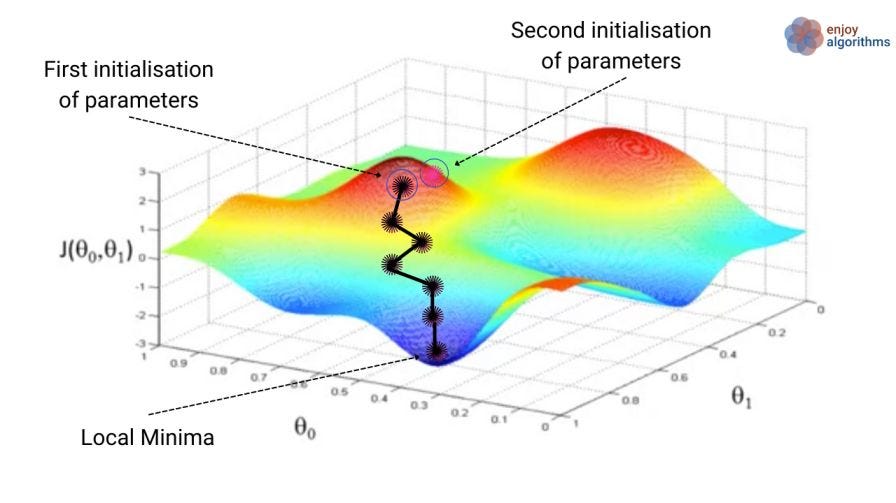

We start with some set of values for our model parameters (weights and biases) and improve them gradually; depending on where we start, we may end up at a different minimum. In machine learning, we use gradient descent to update the parameters of our model. Its goal is to minimize the objective function f(x), assumed convex, through iteration. Gradient descent is widely used to optimize many types of models, such as linear regression, logistic regression, neural networks, and support vector machines. Imagine you are hiking in a vast, foggy mountain landscape and your goal is to find the lowest point in a valley.
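As an example of a model other than a neural network, the sketch below trains a one-feature logistic regression with batch gradient descent on the mean log loss, starting from a weight and bias of zero. The eight data points are hypothetical and deliberately not linearly separable, so the optimum is finite; the learning rate and step count are assumptions.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_logistic_regression(xs, labels, learning_rate=0.5, n_steps=2000):
    """Batch gradient descent on the mean log loss of a one-feature logistic model."""
    w, b = 0.0, 0.0  # initial weight and bias
    n = len(xs)
    for _ in range(n_steps):
        # Gradient of the mean log loss: mean of (p - label) * x and of (p - label)
        errors = [sigmoid(w * x + b) - t for x, t in zip(xs, labels)]
        grad_w = sum(e * x for e, x in zip(errors, xs)) / n
        grad_b = sum(errors) / n
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b

xs = [0.5, 1.0, 1.5, 2.0, 3.0, 3.5, 4.0, 4.5]
labels = [0, 0, 0, 1, 0, 1, 1, 1]
w, b = train_logistic_regression(xs, labels)
predictions = [1 if sigmoid(w * x + b) >= 0.5 else 0 for x in xs]
print(f"w = {w:.3f}, b = {b:.3f}, predictions = {predictions}")
```

The same loop structure (compute errors, average gradients, step against them) carries over to other differentiable models; only the prediction and loss change.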

Gradient Descent Algorithm — a deep dive

In the update formula b = a − γ∇f(a), b is the next position of the hiker, while a represents the current position. (Source: Coursera.) Mini-batch gradient descent strikes a balance between the efficiency of batch gradient descent and the robustness of stochastic gradient descent. Gradient descent is an important algorithm to understand, as it underpins many of the more advanced algorithms used in machine learning and deep learning. The vanishing gradient problem is a challenge faced in training artificial neural networks, particularly deep feedforward and recurrent neural networks.
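A back-of-the-envelope Python sketch of why gradients can vanish: during backpropagation through a chain of sigmoid units, the chain rule multiplies the gradient by w·σ′(z) at every layer, and σ′(z) is at most 0.25. The chain of identical units, the weight of 1 and the pre-activation of 0 are simplifying assumptions, and they give the largest possible sigmoid factor.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def sigmoid_derivative(z):
    s = sigmoid(z)
    return s * (1.0 - s)  # at most 0.25, reached at z = 0

def backprop_factor(depth, z=0.0, weight=1.0):
    """Product of the per-layer chain-rule factors weight * sigmoid'(z) for a
    chain of `depth` sigmoid units sharing the same hypothetical weight and input."""
    factor = 1.0
    for _ in range(depth):
        factor *= weight * sigmoid_derivative(z)
    return factor

for depth in (1, 5, 10, 20):
    print(depth, backprop_factor(depth))
```

Even in this best case the factor shrinks by 4× per layer, so after 20 layers the gradient reaching the first layer is roughly a trillion times smaller than at the output.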

The key takeaways here are the initial values and the learning rate. In the context of machine learning, gradient descent is the iterative attempt to minimize the prediction error of a model by adjusting its parameters. In general, finding the global minimum of a loss function is hard. Gradient descent relies on negative gradients: it minimizes a function by iteratively moving in the direction of steepest descent, as defined by the negative of the gradient. A gradient is a measurement that quantifies the steepness of a line or curve. Gradient descent is the process by which machine learning models tune their parameters toward optimal values, and it is arguably the heart of most machine learning algorithms, so it is well worth taking the time to understand it.
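The effect of the learning rate can be seen on the simple objective f(x) = x², whose gradient is 2x. In this Python sketch (objective, starting point and step count are assumptions), every update multiplies x by (1 − 2γ), so gradient descent converges only when 0 < γ < 1; the further the starting point is from the minimum, the more steps a small γ needs.

```python
def run_gradient_descent(learning_rate, x0=5.0, n_steps=20):
    """Gradient descent on f(x) = x**2, whose gradient is 2 * x; returns the final x."""
    x = x0
    for _ in range(n_steps):
        x -= learning_rate * 2 * x  # equivalent to x *= (1 - 2 * learning_rate)
    return x

for lr in (0.01, 0.1, 0.9, 1.1):
    print(f"learning rate {lr}: x after 20 steps = {run_gradient_descent(lr):.4g}")
```

With γ = 0.01 progress is slow, γ = 0.1 converges quickly, γ = 0.9 overshoots and oscillates around 0 while still shrinking, and γ = 1.1 diverges.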

Gradient descent is an extremely powerful optimization algorithm that can train linear regression, logistic regression, and neural network models. The minus sign in the update rule accounts for the minimization part of the algorithm, since the goal is to minimize the cost. Linear regression provides a useful exercise for learning stochastic gradient descent, an important algorithm that machine learning methods use to minimize cost functions. In short, gradient descent works iteratively to find the model parameters with the minimal cost or error, and it is a fundamental algorithm for minimizing the loss function during model training in both machine learning and deep learning. Understanding these basic concepts is also key to developing intuition about more advanced methods.

Gradient Descent for Linear Regression Explained, Step by Step

Many algorithms use gradient descent because they need to converge on the parameter values, such as a model’s coefficients, at which a function takes its lowest value. Gradient descent (GD) is an iterative first-order optimization algorithm used to find a local minimum of a given function (or a local maximum, when stepping along the gradient instead of against it), and it is widely used to optimize the parameters of machine learning models by minimizing the cost function. When gradient descent can no longer reduce the cost function and the cost remains near the same level, we can say it has converged to an optimum.
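One way to implement the convergence test described above, stopping once the cost stays near the same level, is sketched below in Python. The tolerance, iteration cap and example objective are assumptions; other common criteria include a small gradient norm or a fixed number of epochs.

```python
def gradient_descent_until_converged(cost, grad, x0, learning_rate=0.1,
                                     tolerance=1e-10, max_iters=10_000):
    """Run gradient descent until the cost changes by less than `tolerance`.

    Returns the final x and the number of iterations performed.
    """
    x = x0
    previous_cost = cost(x)
    for iteration in range(1, max_iters + 1):
        x -= learning_rate * grad(x)
        current_cost = cost(x)
        if abs(previous_cost - current_cost) < tolerance:
            return x, iteration  # cost has stopped changing: treat as converged
        previous_cost = current_cost
    return x, max_iters  # gave up without meeting the tolerance

x_final, iters = gradient_descent_until_converged(
    cost=lambda x: (x - 3) ** 2, grad=lambda x: 2 * (x - 3), x0=0.0)
print(f"x = {x_final:.5f} after {iters} iterations")
```

The iteration cap guards against runs that never meet the tolerance, for example when the learning rate is too large.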

Gradient descent is an optimization algorithm used in machine learning to minimize the cost function by iteratively adjusting the model’s parameters. Once an objective function J(θ) has been defined for a machine learning problem, we still need a way to find the optimal θ* = argmin_θ J(θ), particularly when the objective function is not amenable to analytical optimization. To determine the next point along the loss function curve, the gradient descent algorithm takes some fraction of the gradient’s magnitude (set by the learning rate) and moves the current point that far in the direction of the negative gradient.

What Is Gradient Descent in Machine Learning?

Gradient descent, however, does not always find a global minimum and can become trapped at a local minimum.
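The following Python sketch illustrates how the starting point decides which minimum plain gradient descent finds. The non-convex function f(x) = x⁴ − 3x² + x (chosen for illustration) has a global minimum near x ≈ −1.30 and a shallower local minimum near x ≈ 1.13; the learning rate and step count are assumptions.

```python
def f(x):
    return x**4 - 3 * x**2 + x

def grad_f(x):
    return 4 * x**3 - 6 * x + 1

def descend(x0, learning_rate=0.01, n_steps=1000):
    """Plain gradient descent on f from the starting point x0."""
    x = x0
    for _ in range(n_steps):
        x -= learning_rate * grad_f(x)
    return x

left = descend(-2.0)
right = descend(2.0)
print(f"start -2.0 -> x = {left:.3f}, f(x) = {f(left):.3f}")
print(f"start  2.0 -> x = {right:.3f}, f(x) = {f(right):.3f}")
```

Both runs stop where the gradient is zero, but the run started at 2.0 is trapped in the local minimum, whose cost is clearly higher.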

Polynomial Regression — Gradient Descent from Scratch

Gradient descent is an optimization algorithm mainly used to find the minimum of a function. Here is the formula for gradient descent:

b = a − γ∇f(a)

This equation describes what the algorithm does at each step: from the current point a, it moves against the gradient ∇f(a), scaled by the learning rate γ, to reach the next point b. Since it is designed to find a local minimum of a differentiable function, gradient descent is widely used in machine learning and deep learning models, such as linear regression and logistic regression, to find the parameters that minimize the model’s cost function. The number of iterations needed for convergence can vary a lot. Stochastic gradient descent, which updates the parameters using one training example at a time, is an important and widely used variant in machine learning.
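Applying the update b = a − γ∇f(a) to polynomial regression gives the sketch below, written in Python from scratch. The coefficient vector plays the role of a, f is half the mean squared error, and γ is the learning rate; the noise-free quadratic data, the degree and the step settings are assumptions for illustration.

```python
def fit_polynomial_gd(xs, ys, degree, learning_rate=0.5, n_steps=2000):
    """Fit y ≈ sum_k c[k] * x**k by batch gradient descent on half the mean squared error."""
    n = len(xs)
    coeffs = [0.0] * (degree + 1)  # a: the current point (all coefficients start at 0)
    for _ in range(n_steps):
        residuals = [sum(c * x**k for k, c in enumerate(coeffs)) - y
                     for x, y in zip(xs, ys)]
        # Partial derivative with respect to c[k]: mean of residual * x**k
        gradient = [sum(r * x**k for r, x in zip(residuals, xs)) / n
                    for k in range(degree + 1)]
        # b = a - γ * gradient
        coeffs = [c - learning_rate * g for c, g in zip(coeffs, gradient)]
    return coeffs

xs = [-1.0, -0.5, 0.0, 0.5, 1.0]
ys = [1 - 2 * x + 0.5 * x**2 for x in xs]  # noise-free quadratic
coeffs = fit_polynomial_gd(xs, ys, degree=2)
print([round(c, 4) for c in coeffs])  # → [1.0, -2.0, 0.5]
```

Keeping x in [−1, 1] keeps the powers of x on similar scales; with wider ranges, the features would typically need rescaling for a single learning rate to work well.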

Gradient descent is a standard optimization algorithm. In its mini-batch form, the mean gradient calculated over each mini-batch is used to update the weights.
